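To keep a majority of each partition's replicas available when a single machine fails, OceanBase Database never schedules multiple replicas of the same partition on the same machine. Because the replicas of a partition are spread across different Zones/Regions, both data reliability and database service availability are preserved in the event of a city-level disaster or a data center failure, striking a balance between reliability and availability. OceanBase Database offers two innovative disaster-recovery deployments: three regions with five data centers, which tolerates a city-level disaster without data loss, and three data centers in the same city, which tolerates a data-center-level failure without data loss. The figure below shows both deployment modes.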

Video walkthrough:

https://www.bilibili.com/video/BV1PiaXzHEiA/?aid=115165924233...

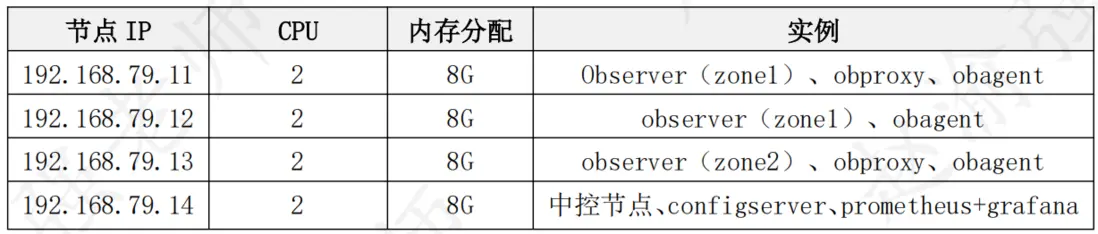
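The availability claim in the opening paragraph rests on majority arithmetic: a partition keeps serving as long as a majority of its replicas survive. The minimal sketch below checks that for a given spread of replicas across sites. It assumes one full replica per data center and, for the three-regions-five-data-centers layout, a 2-2-1 spread of data centers across the three cities; both are illustrative assumptions rather than OceanBase's own placement logic, and the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical sketch: majority-quorum arithmetic for replica layouts.
# Assumption: one full replica per data center; per-site counts are illustrative.

# Print the smallest majority of n replicas.
majority() {
  echo $(( $1 / 2 + 1 ))
}

# Return 0 if a majority of replicas survives the loss of the largest single site.
# Arguments: replica count per site, e.g. "2 2 1" for five replicas in three cities.
survives_site_loss() {
  local total=0 max=0 c
  for c in "$@"; do
    total=$(( total + c ))
    if [ "$c" -gt "$max" ]; then
      max=$c
    fi
  done
  [ $(( total - max )) -ge "$(majority "$total")" ]
}
```

For example, `survives_site_loss 2 2 1 && echo "majority survives"` prints `majority survives`: losing either two-replica city still leaves 3 of 5 replicas, which is exactly a majority. The same check passes for `1 1 1` (three data centers in one city, losing one leaves 2 of 3).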

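The following example deploys OceanBase Community Edition on multiple machines to demonstrate how to set up a fully distributed OceanBase cluster. The table below lists the instances included in the cluster.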

A fully distributed OceanBase cluster is the deployment mode actually used in production: a distributed cluster deployed across multiple machines.

(1) Install obd online on the control machine

# If the host has Internet access, run the following command to install obd online.

bash -c "$(curl -s https://obbusiness-private.oss-cn-shanghai.aliyuncs.com/download-center/opensource/oceanbase-all-in-one/installer.sh)"
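Because the installer downloads into /tmp, it can help to check free space there first. The sketch below is a hypothetical helper (`has_free_gib` is not part of obd); its 5 GiB default is an assumption that simply mirrors the tmpfs size used in the fstab workaround that follows.

```shell
#!/bin/sh
# Hypothetical helper: check free space before running the obd installer.

# Print the available space (in KiB) of the filesystem that holds directory $1.
# -P forces the POSIX one-line-per-filesystem format; column 4 is "Available".
avail_kib() {
  df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Return 0 if directory $1 has at least $2 GiB free (default 5, an assumed value).
has_free_gib() {
  local dir="$1" need_gib="${2:-5}" avail
  avail=$(avail_kib "$dir")
  [ -n "$avail" ] || return 2
  [ "$avail" -ge $(( need_gib * 1024 * 1024 )) ]
}
```

Usage: `has_free_gib /tmp 5 || echo "/tmp has less than 5 GiB free"`.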

# If /tmp does not have enough free space, the downloaded files cannot be written. To fix this:

# Edit /etc/fstab and add the following line (without the leading #), then reboot or run mount /tmp for it to take effect:

# tmpfs /tmp tmpfs nodev,nosuid,size=5G 0 0

(2) After a successful installation, the following message is printed

##########################################################################

Install Finished

==========================================================================

Setup Environment: source ~/.oceanbase-all-in-one/bin/env.sh

Quick Start: obd demo

Use Web Service to install: obd web

Use Web Service to upgrade: obd web upgrade

More Details: obd -h

==========================================================================

(3) Run the following command to load the environment variables

source ~/.oceanbase-all-in-one/bin/env.sh
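`source` only changes the environment of the current shell, so a new terminal will not find obd until env.sh is sourced again (or the source line is added to ~/.bashrc). A small hypothetical check such as the one below confirms that obd is on PATH before moving on.

```shell
#!/bin/sh
# Hypothetical helper: confirm that a command (here, obd) resolves in the current shell.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    printf 'found %s at %s\n' "$1" "$(command -v "$1")"
  else
    printf 'error: %s is not in PATH\n' "$1" >&2
    return 1
  fi
}
```

Usage: `require_cmd obd && obd -h`.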

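# After installation, a tmp.xxx folder (for example, tmp.66RxuoJG0f) is created under /tmp as the installation directory.

(4) On the control node, configure passwordless SSH login to each node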

# Generate a key pair.

ssh-keygen -t rsa

# Copy the public key to each node.

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.79.11

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.79.12

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.79.13

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.79.14
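The key generation and the four ssh-copy-id calls can also be scripted as one loop that verifies each node right away. In this sketch the node list is taken from this walkthrough, the names `setup_passwordless_ssh`, `NODES`, `SSH_USER` and `DRY_RUN` are hypothetical, and `DRY_RUN` defaults to 1 so the script only prints the commands it would run. `ssh -o BatchMode=yes` makes ssh fail instead of prompting for a password, which turns the login check into a pass/fail test.

```shell
#!/bin/sh
# Hypothetical helper: distribute the control machine's key and verify key-based login.
# NODES, SSH_USER and DRY_RUN can be overridden from the environment.
NODES="${NODES:-192.168.79.11 192.168.79.12 192.168.79.13 192.168.79.14}"
SSH_USER="${SSH_USER:-root}"
DRY_RUN="${DRY_RUN:-1}"

# Run a command, or only print it (each argument single-quoted) when DRY_RUN=1.
run() {
  if [ "$DRY_RUN" = 1 ]; then
    printf '+'
    printf " '%s'" "$@"
    printf '\n'
  else
    "$@"
  fi
}

setup_passwordless_ssh() {
  local key="$HOME/.ssh/id_rsa" host
  # Generate an RSA key pair with an empty passphrase only if none exists yet.
  if [ ! -f "$key" ]; then
    run ssh-keygen -t rsa -N "" -f "$key"
  fi
  for host in $NODES; do
    # Append the public key to the node's authorized_keys (asks for the password once).
    run ssh-copy-id -i "$key.pub" "$SSH_USER@$host"
    # BatchMode=yes: fail instead of prompting, so a password prompt counts as a failure.
    run ssh -o BatchMode=yes "$SSH_USER@$host" true
  done
}
```

Call `setup_passwordless_ssh` to preview the commands; set `DRY_RUN=0` to execute them, in which case ssh-copy-id asks for each node's root password once.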

# Verify passwordless login.

ssh 192.168.79.11

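# You should now be logged in to the host without a password prompt.

(5) Edit the deployment configuration file all-components.yaml with the following content: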

# Note: each observer in OceanBase needs at least 6 GB of memory, so make sure the VMs can provide enough; setting each VM that runs an observer to 8 GB works.

user:
  username: root
  password: Welcome_7788
  port: 22
oceanbase-ce:
  depends:
    - ob-configserver
  servers:
    - name: server1
      ip: 192.168.79.11
    - name: server2
      ip: 192.168.79.12
    - name: server3
      ip: 192.168.79.13
  global:
    cluster_id: 1
    memory_limit: 6G
    system_memory: 1G
    datafile_size: 10G
    log_disk_size: 5G
    cpu_count: 2
    production_mode: false
    enable_syslog_wf: false
    max_syslog_file_count: 4
    root_password: Welcome_1
  server1:
    mysql_port: 2881
    rpc_port: 2882
    obshell_port: 2886
    home_path: /root/observer
    data_dir: /root/obdata
    redo_dir: /root/redo
    zone: zone1
  server2:
    mysql_port: 2881
    rpc_port: 2882
    obshell_port: 2886
    home_path: /root/observer
    data_dir: /root/obdata
    redo_dir: /root/redo
    zone: zone1
  server3:
    mysql_port: 2881
    rpc_port: 2882
    obshell_port: 2886
    home_path: /root/observer
    data_dir: /root/obdata
    redo_dir: /root/redo
    zone: zone2
obproxy-ce:
  depends:
    - oceanbase-ce
    - ob-configserver
  servers:
    - 192.168.79.11
    - 192.168.79.13
  global:
    listen_port: 2883
    prometheus_listen_port: 2884
    home_path: /root/obproxy
    enable_cluster_checkout: false
    skip_proxy_sys_private_check: true
    enable_strict_kernel_release: false
    obproxy_sys_password: 'Welcome_1'
    observer_sys_password: 'Welcome_1'
obagent:
  depends:
    - oceanbase-ce
  servers:
    - name: server1
      ip: 192.168.79.11
    - name: server2
      ip: 192.168.79.12
    - name: server3
      ip: 192.168.79.13
  global:
    home_path: /root/obagent
prometheus:
  servers:
    - 192.168.79.14
  depends:
    - obagent
  global:
    home_path: /root/prometheus
grafana:
  servers:
    - 192.168.79.14
  depends:
    - prometheus
  global:
    home_path: /root/grafana
    login_password: 'Welcome_1'
ob-configserver:
  servers:
    - 192.168.79.14
  global:
    listen_port: 8080
    home_path: /root/ob-configserver
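Two quick pre-deployment checks can catch common mistakes in this setup. The hypothetical helpers below compare MemTotal against the 6 GB observer minimum from the note above (MemTotal reports slightly less than a VM's nominal size, so an 8 GB VM would fail an 8 GiB check), and list the distinct zone names in the YAML using a plain text match rather than a real YAML parser. Run the memory check on each observer host.

```shell
#!/bin/sh
# Hypothetical pre-deployment checks for all-components.yaml.

# Return 0 if MemTotal in a meminfo-format file is at least $1 GiB.
# $2 defaults to /proc/meminfo; MemTotal is reported in kB (KiB).
has_mem_gib() {
  local need_gib="$1" file="${2:-/proc/meminfo}" total_kib
  total_kib=$(awk '/^MemTotal:/ { print $2 }' "$file")
  [ -n "$total_kib" ] || return 2
  [ "$total_kib" -ge $(( need_gib * 1024 * 1024 )) ]
}

# Print each distinct "zone:" value in an obd YAML file, one per line.
# This is a text match on "zone:" lines, not a YAML parse.
list_zones() {
  grep -E '^[[:space:]]*zone:' "$1" | awk '{ print $2 }' | sort -u
}
```

Usage: `has_mem_gib 6 || echo "less than 6 GiB of RAM on $(hostname)"`; `list_zones all-components.yaml` prints zone1 and zone2 for the file above, which is a quick way to see how many zones (failure domains) the configuration defines.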

(6) Run the following command to deploy the cluster

obd cluster deploy myob-cluster -c all-components.yaml

# myob-cluster is the name of the cluster.

(7) After deployment completes, run the following command to start the cluster

obd cluster start myob-cluster

# On success, output like the following is printed:

......
+------------------------------------------------------------------+
|                         ob-configserver                          |
+---------------+------+---------------+----------+--------+-------+
| server        | port | vip_address   | vip_port | status | pid   |
+---------------+------+---------------+----------+--------+-------+
| 192.168.79.14 | 8080 | 192.168.79.14 | 8080     | active | 51742 |
+---------------+------+---------------+----------+--------+-------+
curl -s 'http://192.168.79.14:8080/services?Action=GetObProxyConfig'
Connect to observer 192.168.79.11:2881 ok
Wait for observer init ok
+-------------------------------------------------+
|                  oceanbase-ce                   |
+---------------+---------+------+-------+--------+
| ip            | version | port | zone  | status |
+---------------+---------+------+-------+--------+
| 192.168.79.11 | 4.3.5.1 | 2881 | zone1 | ACTIVE |
| 192.168.79.12 | 4.3.5.1 | 2881 | zone1 | ACTIVE |
| 192.168.79.13 | 4.3.5.1 | 2881 | zone2 | ACTIVE |
+---------------+---------+------+-------+--------+
obclient -h192.168.79.11 -P2881 -uroot -p'Welcome_1' -Doceanbase -A
cluster unique id: ca2bc58a-5296-598f-8c3c-89efb5210f03-195d6f47d1f-01050304
Connect to obproxy ok
+-------------------------------------------------------------------+
|                            obproxy-ce                             |
+---------------+------+-----------------+-----------------+--------+
| ip            | port | prometheus_port | rpc_listen_port | status |
+---------------+------+-----------------+-----------------+--------+
| 192.168.79.11 | 2883 | 2884            | 2885            | active |
| 192.168.79.13 | 2883 | 2884            | 2885            | active |
+---------------+------+-----------------+-----------------+--------+
obclient -h192.168.79.11 -P2883 -uroot@proxysys -p'Welcome_1' -Doceanbase -A
Connect to Obagent ok
+------------------------------------------------------------------+
|                             obagent                              |
+---------------+--------------------+--------------------+--------+
| ip            | mgragent_http_port | monagent_http_port | status |
+---------------+--------------------+--------------------+--------+
| 192.168.79.11 | 8089               | 8088               | active |
| 192.168.79.12 | 8089               | 8088               | active |
| 192.168.79.13 | 8089               | 8088               | active |
+---------------+--------------------+--------------------+--------+
Connect to Prometheus ok
+---------------------------------------------------------+
|                       prometheus                        |
+---------------------------+-------+------------+--------+
| url                       | user  | password   | status |
+---------------------------+-------+------------+--------+
| http://192.168.79.14:9090 | admin | ucws4ExTcX | active |
+---------------------------+-------+------------+--------+
Connect to grafana ok
+-------------------------------------------------------------------+
|                              grafana                              |
+---------------------------------------+-------+----------+--------+
| url                                   | user  | password | status |
+---------------------------------------+-------+----------+--------+
| http://192.168.79.14:3000/d/oceanbase | admin | admin    | active |
+---------------------------------------+-------+----------+--------+
myob-cluster running
......
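To make the start step scriptable, the output can be saved with `obd cluster start myob-cluster | tee start.log` and checked afterwards. The sketch below is a hypothetical helper that only inspects that saved text: it looks for the final `<cluster> running` line and counts observer rows ending in `| ACTIVE |`. The expected observer count (3) comes from this walkthrough's YAML, and the exact output format may differ between obd versions.

```shell
#!/bin/sh
# Hypothetical post-start check on saved "obd cluster start" output.
# Usage: check_start_log <log-file> <cluster-name> <expected-active-observers>
check_start_log() {
  local log="$1" name="$2" expected="$3" active
  # The final status line looks like "myob-cluster running".
  if ! awk -v n="$name" '$1 == n && $2 == "running" { found = 1 } END { exit !found }' "$log"; then
    echo "cluster $name did not report 'running'" >&2
    return 1
  fi
  # Observer rows in the oceanbase-ce table end with "| ACTIVE |";
  # obproxy/obagent rows use lowercase "active" and are not counted.
  active=$(grep -c '| ACTIVE |[[:space:]]*$' "$log" || true)
  if [ "$active" -ne "$expected" ]; then
    echo "expected $expected ACTIVE observers, found $active" >&2
    return 1
  fi
  echo "ok: $name running with $active ACTIVE observers"
}
```

Usage: `check_start_log start.log myob-cluster 3`.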