預訓練模型蒸餾

在前面的課程中,大家瞭解了自然語言處理領域中一些經典的模型,比如BERT、ERNIE等,它們在NLP任務中的強大之處是毫無疑問的,但由於預訓練模型的參數較多,體積龐大,在部署時對設備的運算速度和內存大小以及能耗都有着極高的要求。但當我們處理實際的產業應用需求時,比如將深度學習模型部署到手機上時,就需要對模型進行壓縮,在不影響性能的前提下使其變得體積更小、速度更快、能耗更低。本節課我們會先對預訓練模型蒸餾中幾個比較經典的模型進行介紹,比如:Patient-KD、DistilBERT、TinyBERT和DynaBERT。從原理和結構上對以上幾個模型進行詳解。然後再通過一個實驗案例,帶領大家使用DynaBERT訓練策略中寬度自適應部分來對TinyBERT在GLUE基準數據集的QQP任務中進行蒸餾,以此進行實際效果驗證。

- 更多CV和NLP中的transformer模型(BERT、ERNIE、ViT、DeiT、Swin Transformer等)、深度學習資料,請參考:awesome-DeepLearning

- 瞭解並使用更多模型壓縮相關工具,請參考:PaddleSlim

模型壓縮簡介

模型壓縮方法主要可以分為以下四類:

- 參數修剪和量化(Parameter pruning and quantization):用於消除對模型表現影響不大的冗餘參數。早期工作表明,網絡修剪和量化在降低網絡複雜性和解決過擬合問題上是有效的。它可以為神經網絡帶來正則化效果從而提高泛化能力。參數修剪和量化可以進一步分為三類:量化和二值化,網絡剪枝和結構化矩陣。量化可以看作是“量子級別的減肥”,神經網絡模型的參數一般都用float32的數據表示,但如果我們將float32的數據計算精度變成int8的計算精度,則可以犧牲一點模型精度來換取更快的計算速度。而剪枝則類似“化學結構式的減肥”,將模型結構中對預測結果不重要的網絡結構剪裁掉,使網絡結構變得更加 ”瘦身“。比如,在每層網絡,有些神經元節點的權重非常小,對模型加載信息的影響微乎其微。如果將這些權重較小的神經元刪除,則既能保證模型精度不受大影響,又能減小模型大小。結構化矩陣則是用少於

個參數來描述一個

- 低秩分解(Low-rank factorization):卷積神經網絡中的主要計算量在於卷積計算,而卷積計算本質上是矩陣分析問題,因此可以通過對多維矩陣進行分解的方式,用多個低秩矩陣來逼近該矩陣,比如將一個3D卷積轉換為3個1D卷積,從而降低參數複雜度和運算複雜度。

- 遷移/壓縮卷積濾波器(Transferred/compact convolutional filters):通過構造特殊結構的卷積濾波器來降低存儲空間、減小計算複雜度。

- 知識蒸餾(Knowledge distillation):類似“老師教學生”,使用一個效果好的大模型指導一個小模型訓練,因為大模型可以提供更多的軟分類信息量,所以會訓練出一個效果接近大模型的小模型。

在本節課中,我們主要講述以知識蒸餾的方法對BERT(transformer-based)模型進行壓縮。

知識蒸餾

2014年,Geoffrey Hinton在 Distilling the Knowledge in a Neural Network 中提出知識蒸餾(KD)概念:把從一個複雜的大模型(Teacher Network)上學習到的知識遷移到另一個更適合部署的小模型上(Student Network),叫知識蒸餾。

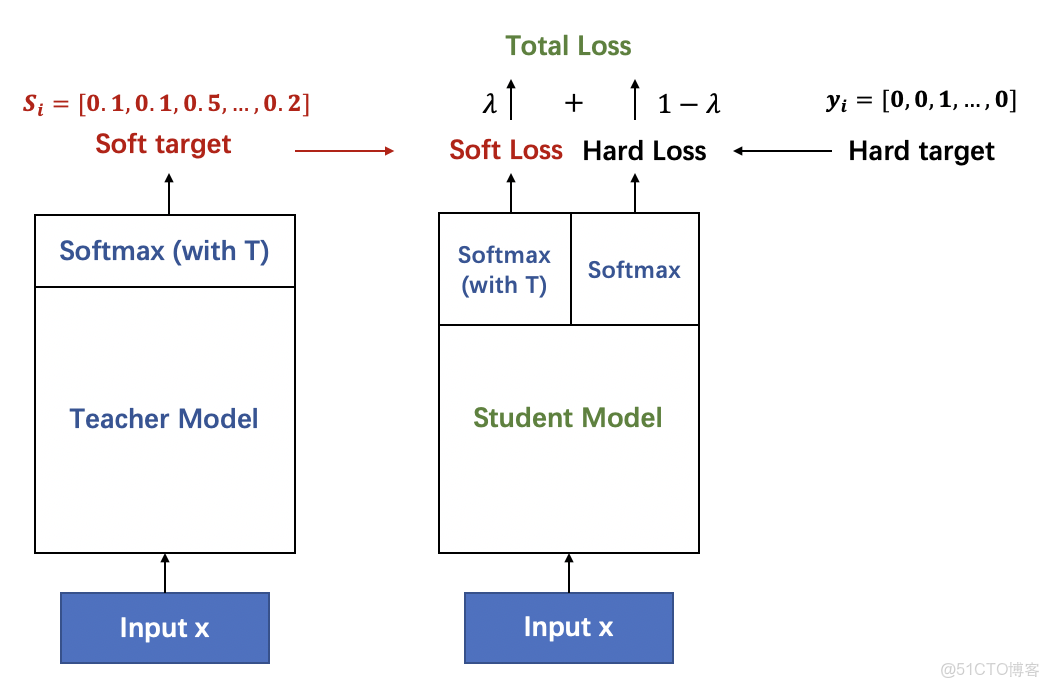

知識蒸餾結構

如上圖所示,左邊的教師網絡是一個複雜的大模型,以它帶有温度參數T的softmax輸出作為軟目標作為學生網絡學習的軟目標。學生網絡在學習時,也通過帶有温度參數T的softmax進行概率分佈預測,與軟目標計算soft loss。同時,也通過正常的訓練流程獲得預測的樣本類別與真實的樣本類別計算hard loss。最終根據

其中,知識蒸餾過程中涉及到的兩種標籤分別是:

- 硬標籤(hard target):網絡訓練的目標,即分類任務中正確分類的label,正標籤為1,其餘標籤都為0;

- 軟標籤(soft target):大模型的softmax層輸出的類別概率,正標籤的概率最高。

論文中提出的softmax函數中增加了温度(Temperature)這個參數,其公式如下:

其中,T代表温度。原始的softmax函數就是

舉一個例子,假設一個三分類問題,

當

可以看到,T越高,softmax的類分佈概率會變得越平滑。這就使得學生網絡可以學習到教師網絡對負標籤歸納的信息。

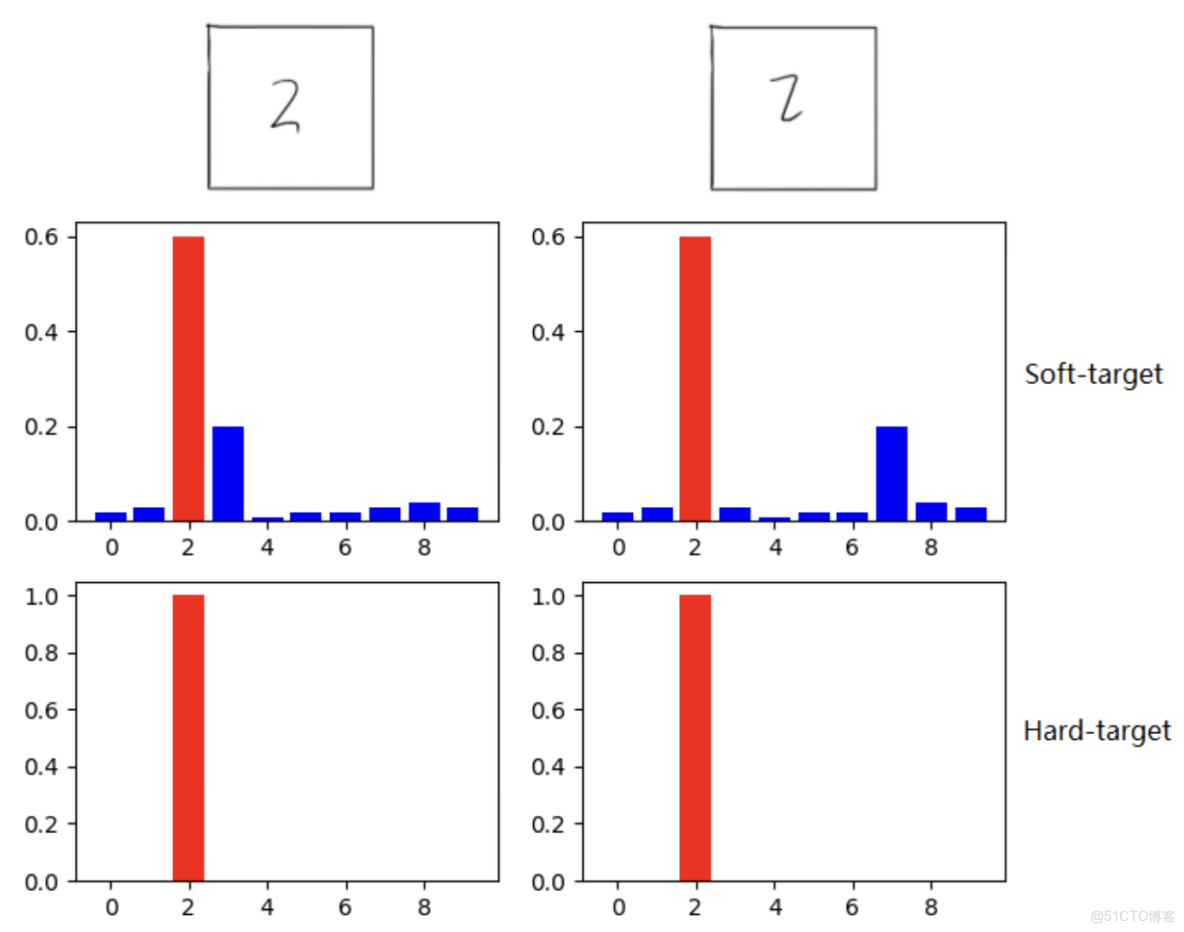

手寫數字識別任務(圖來源於參考文獻3)

舉個例子,如上圖所示,在用MNIST數據集做手寫數字識別任務時,某個輸入的“2”更加類似“3”,則softmax的輸出值中“3”對應的概率應該要比其他負標籤類別高;而另一個“2”更類似於“7”,則這個這個樣本的softmax輸出值中“7”對應的概率應該比其他負標籤類別高。這兩個“2”對應的hard target是相同的,但是他們的soft target是不同的,soft target內藴含着更多的信息。

Patient-KD

Patient-KD 算法綜述

論文地址:Patient Knowledge Distillation for BERT Model Compression

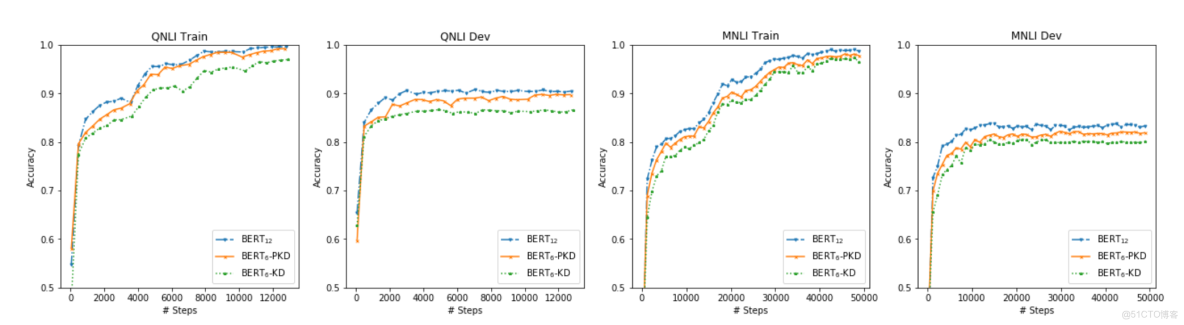

圖1: Vanilla KD和PKD比較

BERT預訓練模型對資源的高需求導致其很難被應用在實際問題中,為緩解這個問題,論文中提出了Patient Knowledge Distillation(Patient KD)方法,將原始大模型壓縮為同等有效的輕量級淺層網絡。同時,作者對以往的知識蒸餾方法進行了調研,如圖1所示,vanilla KD在QNLI和MNLI的訓練集上可以很快的達到和teacher model相媲美的性能,但在測試集上則很快達到飽和。對此,作者提出一種假設,在知識蒸餾的過程中過擬合會導致泛化能力不良。為緩解這個問題,論文中提出一種“耐心”師生機制,即讓Patient-KD中的學生模型從教師網絡的多箇中間層進行知識提取,而不是隻從教師網絡的最後一層輸出中學習,該學習方法遵循以下兩個策略:

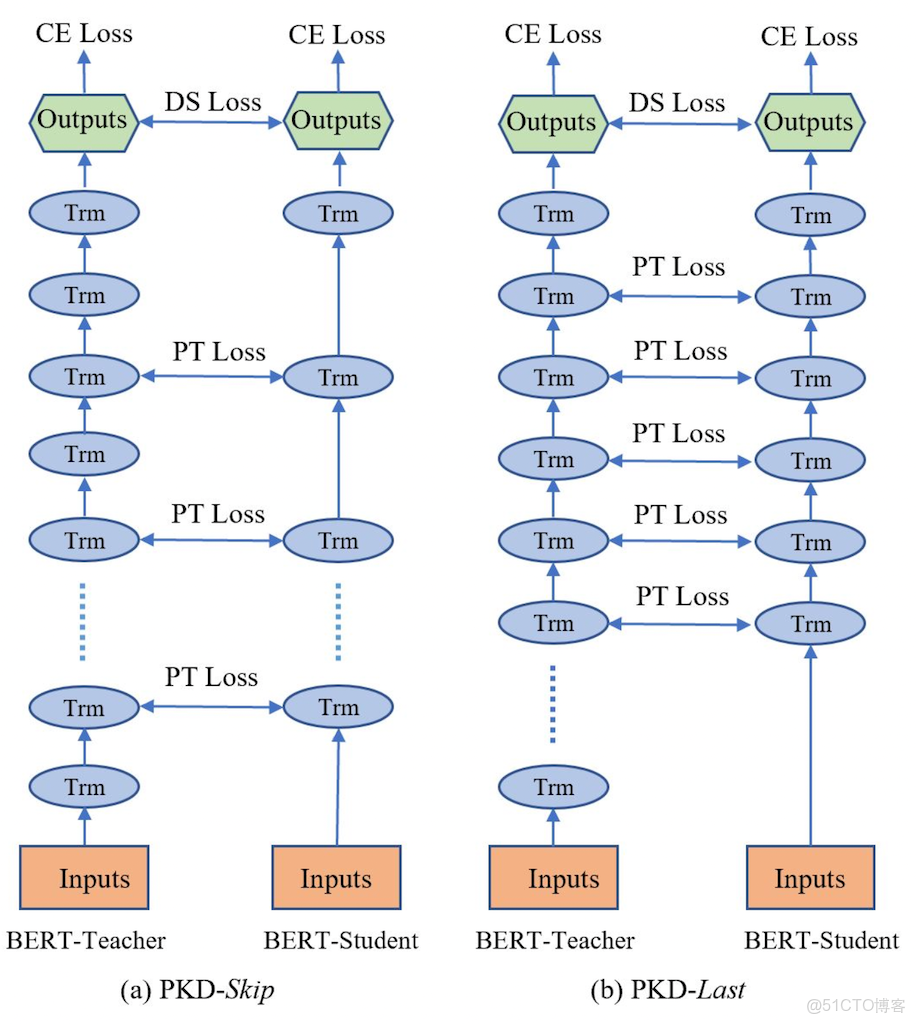

- PKD-Skip: 從每k層學習,假設教師網絡的底層和高層中都包含重要信息,需要被學習到(如圖2a所示)

- PKD-Last: 從最後k層學習,假設教師網絡越靠後的層包含越豐富的知識信息(如圖2b所示)

圖2a: PKD-Skip 學生網絡學習教師網絡每兩層的輸出 圖2b: PKD-Last 學生網絡從教師網絡的最後六層學習

因為在BERT中僅使用最後一層的[CLS] token的輸出來進行預測,且在其他BERT的變體模型中,如SDNet,是通過對每一層的[CLS] embedding的加權平均值進行處理並預測。由此可以推斷,如果學生模型可以從任何教師網絡中間層中的[CLS]表示中學習,那麼它就有可能獲得類似教師網絡的泛化能力。

因此,Patient-KD中提出特殊的一種損失函數的計算方式:

其中,對於輸入

同時,Patient-KD中也使用了

最終的目標損失函數可以表示為:

實驗結果

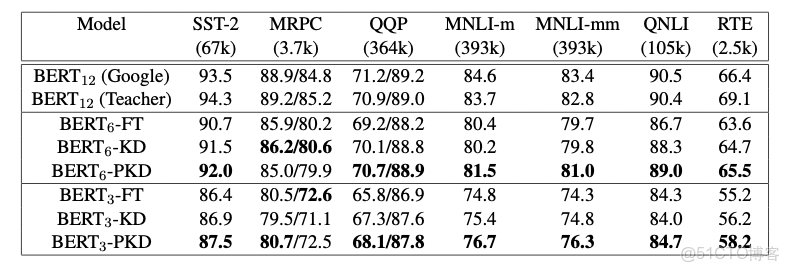

圖3: results from the GLUE test server

作者將模型預測提交到GLUE並獲得了在測試集上的結果,如圖3所示。與fine-tuning和vanilla KD這兩種方法相比,使用PKD訓練的

圖4: PKD-Last 和 PKD-Skip 在GLUE基準上的對比

儘管這兩種策略都比vanilla KD有所改進,但PKD-Skip的表現略好於PKD-Last。作者推測,這可能是由於每k層的信息提煉捕獲了從低級到高級的語義,具備更豐富的內容和更多不同的表示,而只關注最後k層往往會捕獲相對同質的語義信息。

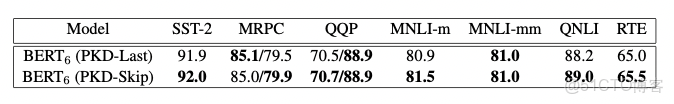

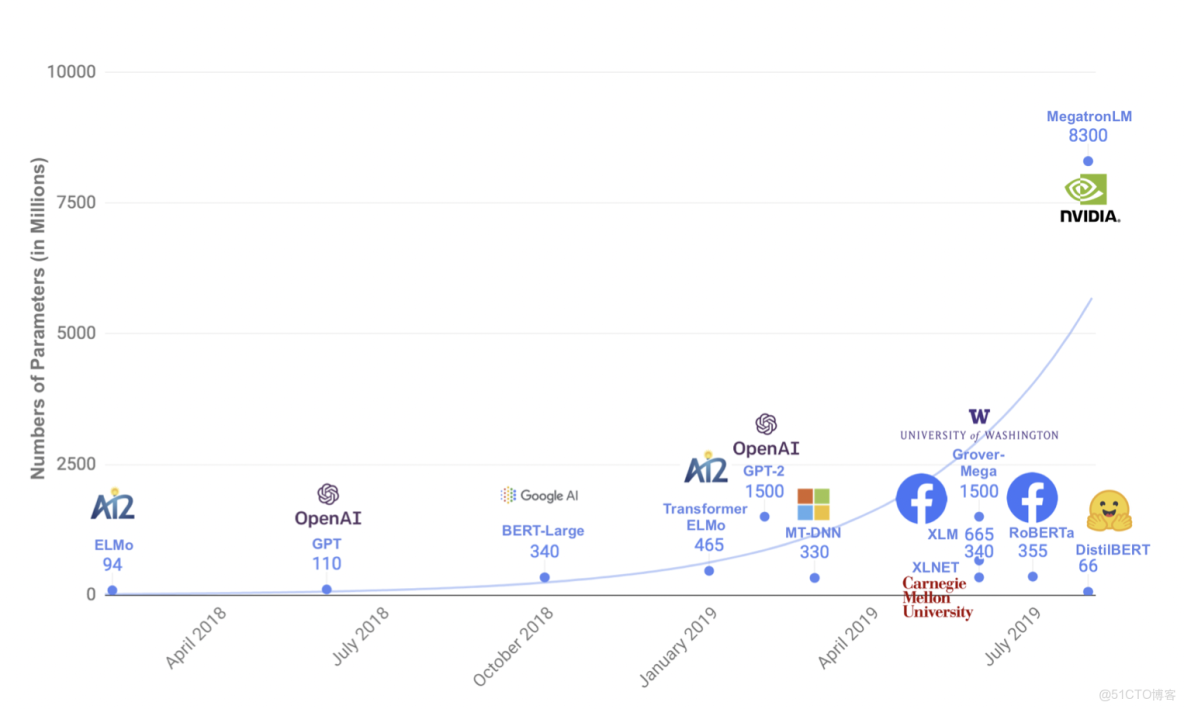

圖5: 參數量和推理時間對比

圖5展示了

DistilBERT

DistilBERT 算法綜述

論文地址:DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter

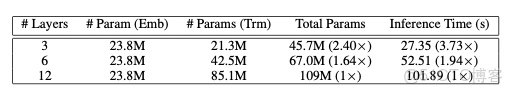

圖1: 幾個預訓練模型的參數量統計

近年來,大規模預訓練語言模型成為NLP任務的基本工具,雖然這些模型帶來了顯著的改進,但它們通常擁有數億個參數(如圖1所示),而這會引起兩個問題。首先,大型預訓練模型需要的計算成本很高。其次,預訓練模型不斷增長的計算和內存需求可能會阻礙語言處理應用的廣泛落地。因此,作者提出DistilBERT,它表明小模型可以通過知識蒸餾從大模型中學習,並可以在許多下游任務中達到與大模型相似的性能,從而使其在推理時更輕、更快。

學生網絡結構

學生網絡DistilBERT具有與BERT相同的通用結構,但token-type embedding和pooler層被移除,層數減半。學生網絡通過從教師網絡中每兩層抽取一層來進行初始化。

Training loss

其中,

同時使用了Hinton在2015年提出的softmax-temperature:

其中,

最終的loss函數為

實驗結果

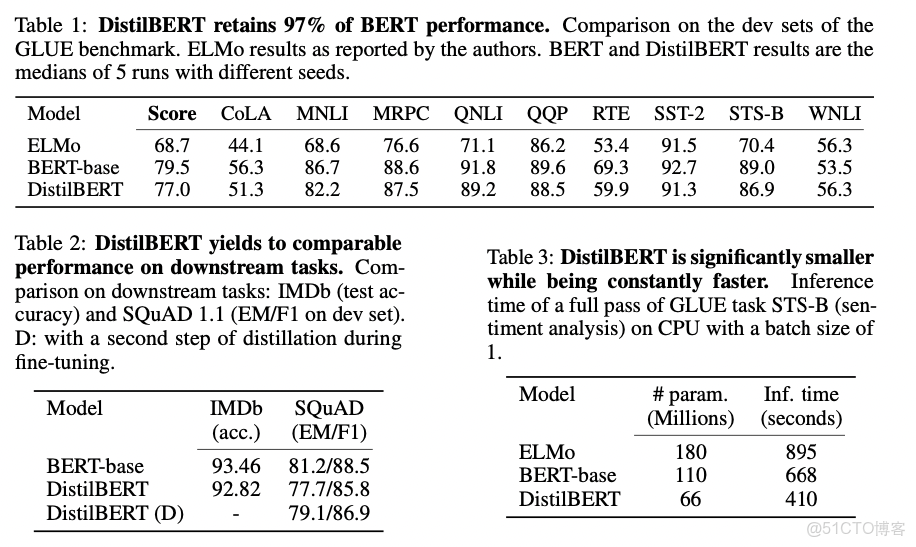

圖2:在GLUE數據集上的測試結果、下游任務測試和參數量對比

根據上圖我們可以看到,DistilBERT與BERT相比減少了40%的參數,同時保留了BERT 97%的性能,但提高了60%的速度。

TinyBERT

TinyBERT 算法綜述

論文地址:TinyBERT: Distilling BERT for Natural Language Understanding

TinyBERT是由華中科技大學和華為諾亞方舟實驗室在2019年聯合提出的一種針對transformer-based模型的知識蒸餾方法,以BERT為例對大型預訓練模型進行研究。四層結構的

圖1: TinyBERT learning

TinyBERT主要做了以下兩點創新:

- 提供一種新的針對 transformer-based 模型進行蒸餾的方法,使得BERT中具有的語言知識可以遷移到TinyBERT中去。

- 提出一個兩階段學習框架,在預訓練階段和fine-tuning階段都進行蒸餾,確保TinyBERT可以充分的從BERT中學習到一般領域和特定任務兩部分的知識。

Transformer Distillation

假設TinyBERT有M層transformer layer,teacher BERT有N層transformer layer,則需要從teacher BERT的N層中抽取M層用於transformer層的蒸餾。

其中

TinyBERT的蒸餾分為以下三個部分:transformer-layer distillation、embedding-layer distillation、prediction-layer distillation。

Transformer-layer distillation

Transformer-layer的蒸餾由attention based蒸餾和hidden states based蒸餾兩部分組成。

圖2: Transformer-layer distillation

其中,attention based蒸餾是受到論文Clack et al., 2019的啓發,這篇論文中提到,BERT學習的注意力權重可以捕獲豐富的語言知識,這些語言知識包括對自然語言理解非常重要的語法和共指信息。因此,TinyBERT提出attention based蒸餾,其目的是使學生網絡很好地從教師網絡處學習到這些語言知識。具體到模型中,就是讓TinyBERT網絡學習擬合BERT網絡中的多頭注意力矩陣,目標函數定義如下:

其中,

hidden states based蒸餾是對transformer層輸出的知識進行了蒸餾處理,目標函數定義為:

其中,

Embedding-layer Distillation

Embedding loss和hidden states loss同理,其中

Prediction-layer Distillation

其中,

對上述三個部分的loss函數進行整合,則可以得到教師網絡和學生網絡之間對應層的蒸餾損失如下:

KaTeX parse error: No such environment: equation at position 8: \begin{̲e̲q̲u̲a̲t̲i̲o̲n̲}̲ L_{layer} = \…

實驗結果

圖3: Results evaluated on GLUE benchmark

作者在GLUE基準上評估了TinyBERT的性能,模型大小、推理時間速度和準確率如圖3所示。實驗結果表明,TinyBERT在所有GLUE任務上都優於

DynaBERT

DynaBERT 算法綜述

論文地址:DynaBERT: Dynamic BERT with Adaptive Width and Depth

近年的模型壓縮方式基本上都是將大型的BERT網絡壓縮到一個固定的小尺寸網絡。而實際工作中,不同的任務對推理速度和精度的要求不同,有的任務可能需要四層的壓縮網絡而有的任務會需要六層的壓縮網絡。DynaBERT(dynamic BERT)提出一種不同的思路,它可以通過選擇自適應寬度和深度來靈活地調整網絡大小,從而得到一個尺寸可變的網絡。

DynaBERT的訓練階段包括兩部分,首先通過知識蒸餾的方法將teacher BERT的知識遷移到有自適應寬度的子網絡student

圖1: DynaBERT的訓練過程

寬度自適應 Adaptive Width

一個標準的transformer中包含一個多頭注意力(MHA)模塊和一個前饋網絡(FFN)。在論文中,作者通過變換注意力頭的個數

為了充分利用網絡的容量,更重要的頭部或神經元應該在更多的子網絡中共享。因此,在訓練寬度自適應網絡前,作者在 fine-tuned BERT網絡中根據注意力頭和神經元的重要性對它們進行了排序,然後在寬度方向上以降序進行排列。這種選取機制被稱為 Network Rewiring。

圖2: Network Rewiring

那麼,要如何界定注意力頭和神經元的重要性呢?作者參考 P. Molchanov et al., 2017 和 E. Voita et al., 2019 兩篇論文提出,去掉某個注意力頭或神經元前後的loss變化,就是該注意力頭或神經元的重要程度,變化越大則越重要。

訓練寬度自適應網絡

首先,將BERT網絡作為固定的教師網絡,並初始化

模型蒸餾的loss定義為:

其中,

訓練深度自適應網絡

訓練好寬度自適應的

模型蒸餾的loss定義為:

實驗結果

根據不同的寬度和深度剪裁係數,作者最終得到12個大小不同的DyneBERT模型,其在GLUE上的效果如下:

圖3: results on GLUE benchmark

圖4:Comparison of #parameters, FLOPs, latency on GPU and CPU between DynaBERT and DynaRoBERTa and other methods.

可以看到論文中提出的DynaBERT和DynaRoBERTa可以達到和

使用DynaBERT訓練策略壓縮TinyBERT

下面我們將使用DynaBERT訓練策略中寬度自適應部分對TinyBERT在GLUE基準數據集的QQP任務中進行蒸餾,驗證實際效果。

實驗環境

本實驗使用aistudio至尊版GPU,cuda版本為10.1,具體依賴如下:

- paddeslim使用develop版本

- paddlenlp==2.0.0rc0

- paddlepaddle-gpu==2.0.0.post101

# 配置實驗環境

# cuda 10.1

!pip install paddlenlp==2.0.0rc0

!python -m pip install paddlepaddle-gpu==2.0.0.post101 -f https://paddlepaddle.org.cn/whl/mkl/stable.html

!pip install regexLooking in indexes: https://mirror.baidu.com/pypi/simple/

Collecting paddlenlp==2.0.0rc0

[?25l Downloading https://mirror.baidu.com/pypi/packages/d0/91/fd5fb57e7b931d83f7b944c55eb5826f6331c6eb5a55d1dafeb81d8e0989/paddlenlp-2.0.0rc0-py3-none-any.whl (177kB)

[K |████████████████████████████████| 184kB 18.9MB/s eta 0:00:01

[?25hRequirement already satisfied: jieba in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp==2.0.0rc0) (0.42.1)

Requirement already satisfied: visualdl in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp==2.0.0rc0) (2.1.1)

Requirement already satisfied: seqeval in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp==2.0.0rc0) (1.2.2)

Requirement already satisfied: h5py in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp==2.0.0rc0) (2.9.0)

Requirement already satisfied: colorlog in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp==2.0.0rc0) (4.1.0)

Requirement already satisfied: colorama in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp==2.0.0rc0) (0.4.4)

Requirement already satisfied: flake8>=3.7.9 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (3.8.2)

Requirement already satisfied: requests in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (2.22.0)

Requirement already satisfied: numpy in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (1.20.3)

Requirement already satisfied: six>=1.14.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (1.15.0)

Requirement already satisfied: pre-commit in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (1.21.0)

Requirement already satisfied: flask>=1.1.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (1.1.1)

Requirement already satisfied: Pillow>=7.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (7.1.2)

Requirement already satisfied: Flask-Babel>=1.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (1.0.0)

Requirement already satisfied: bce-python-sdk in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (0.8.53)

Requirement already satisfied: shellcheck-py in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (0.7.1.1)

Requirement already satisfied: protobuf>=3.11.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp==2.0.0rc0) (3.14.0)

Requirement already satisfied: scikit-learn>=0.21.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from seqeval->paddlenlp==2.0.0rc0) (0.24.2)

Requirement already satisfied: pyflakes<2.3.0,>=2.2.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flake8>=3.7.9->visualdl->paddlenlp==2.0.0rc0) (2.2.0)

Requirement already satisfied: importlib-metadata; python_version < "3.8" in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flake8>=3.7.9->visualdl->paddlenlp==2.0.0rc0) (0.23)

Requirement already satisfied: mccabe<0.7.0,>=0.6.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flake8>=3.7.9->visualdl->paddlenlp==2.0.0rc0) (0.6.1)

Requirement already satisfied: pycodestyle<2.7.0,>=2.6.0a1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flake8>=3.7.9->visualdl->paddlenlp==2.0.0rc0) (2.6.0)

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->visualdl->paddlenlp==2.0.0rc0) (2019.9.11)

Requirement already satisfied: chardet<3.1.0,>=3.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->visualdl->paddlenlp==2.0.0rc0) (3.0.4)

Requirement already satisfied: idna<2.9,>=2.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->visualdl->paddlenlp==2.0.0rc0) (2.8)

Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests->visualdl->paddlenlp==2.0.0rc0) (1.25.6)

Requirement already satisfied: nodeenv>=0.11.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddlenlp==2.0.0rc0) (1.3.4)

Requirement already satisfied: aspy.yaml in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddlenlp==2.0.0rc0) (1.3.0)

Requirement already satisfied: identify>=1.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddlenlp==2.0.0rc0) (1.4.10)

Requirement already satisfied: pyyaml in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddlenlp==2.0.0rc0) (5.1.2)

Requirement already satisfied: cfgv>=2.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddlenlp==2.0.0rc0) (2.0.1)

Requirement already satisfied: virtualenv>=15.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddlenlp==2.0.0rc0) (16.7.9)

Requirement already satisfied: toml in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from pre-commit->visualdl->paddlenlp==2.0.0rc0) (0.10.0)

Requirement already satisfied: itsdangerous>=0.24 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddlenlp==2.0.0rc0) (1.1.0)

Requirement already satisfied: Werkzeug>=0.15 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddlenlp==2.0.0rc0) (0.16.0)

Requirement already satisfied: Jinja2>=2.10.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddlenlp==2.0.0rc0) (2.10.1)

Requirement already satisfied: click>=5.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddlenlp==2.0.0rc0) (7.0)

Requirement already satisfied: pytz in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Flask-Babel>=1.0.0->visualdl->paddlenlp==2.0.0rc0) (2019.3)

Requirement already satisfied: Babel>=2.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Flask-Babel>=1.0.0->visualdl->paddlenlp==2.0.0rc0) (2.8.0)

Requirement already satisfied: future>=0.6.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from bce-python-sdk->visualdl->paddlenlp==2.0.0rc0) (0.18.0)

Requirement already satisfied: pycryptodome>=3.8.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from bce-python-sdk->visualdl->paddlenlp==2.0.0rc0) (3.9.9)

Requirement already satisfied: scipy>=0.19.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp==2.0.0rc0) (1.6.3)

Requirement already satisfied: joblib>=0.11 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp==2.0.0rc0) (0.14.1)

Requirement already satisfied: threadpoolctl>=2.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp==2.0.0rc0) (2.1.0)

Requirement already satisfied: zipp>=0.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from importlib-metadata; python_version < "3.8"->flake8>=3.7.9->visualdl->paddlenlp==2.0.0rc0) (0.6.0)

Requirement already satisfied: MarkupSafe>=0.23 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Jinja2>=2.10.1->flask>=1.1.1->visualdl->paddlenlp==2.0.0rc0) (1.1.1)

Requirement already satisfied: more-itertools in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from zipp>=0.5->importlib-metadata; python_version < "3.8"->flake8>=3.7.9->visualdl->paddlenlp==2.0.0rc0) (7.2.0)

[31mERROR: paddlehub 2.0.4 has requirement paddlenlp>=2.0.0rc5, but you'll have paddlenlp 2.0.0rc0 which is incompatible.[0m

Installing collected packages: paddlenlp

Found existing installation: paddlenlp 2.0.1

Uninstalling paddlenlp-2.0.1:

Successfully uninstalled paddlenlp-2.0.1

Successfully installed paddlenlp-2.0.0rc0

Looking in indexes: https://mirror.baidu.com/pypi/simple/

Looking in links: https://paddlepaddle.org.cn/whl/mkl/stable.html

Collecting paddlepaddle-gpu==2.0.0.post101

[?25l Downloading https://paddle-wheel.bj.bcebos.com/2.0.0-gpu-cuda10.1-cudnn7-mkl_gcc8.2/paddlepaddle_gpu-2.0.0.post101-cp37-cp37m-linux_x86_64.whl (678.2MB)

[K |████████████████████████████████| 678.2MB 43kB/s s eta 0:00:011 |▏ | 3.8MB 1.8MB/s eta 0:06:19 |█ | 22.8MB 1.8MB/s eta 0:06:08 |███████████████████████ | 488.9MB 26.4MB/s eta 0:00:08 |███████████████████████████████▎| 664.0MB 62.1MB/s eta 0:00:01

[?25hRequirement already satisfied: astor in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu==2.0.0.post101) (0.8.1)

Requirement already satisfied: numpy>=1.13; python_version >= "3.5" and platform_system != "Windows" in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu==2.0.0.post101) (1.20.3)

Requirement already satisfied: Pillow in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu==2.0.0.post101) (7.1.2)

Requirement already satisfied: decorator in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu==2.0.0.post101) (4.4.2)

Requirement already satisfied: gast==0.3.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu==2.0.0.post101) (0.3.3)

Requirement already satisfied: six in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu==2.0.0.post101) (1.15.0)

Requirement already satisfied: protobuf>=3.1.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu==2.0.0.post101) (3.14.0)

Requirement already satisfied: requests>=2.20.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlepaddle-gpu==2.0.0.post101) (2.22.0)

Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.20.0->paddlepaddle-gpu==2.0.0.post101) (1.25.6)

Requirement already satisfied: idna<2.9,>=2.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.20.0->paddlepaddle-gpu==2.0.0.post101) (2.8)

Requirement already satisfied: chardet<3.1.0,>=3.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.20.0->paddlepaddle-gpu==2.0.0.post101) (3.0.4)

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.20.0->paddlepaddle-gpu==2.0.0.post101) (2019.9.11)

Installing collected packages: paddlepaddle-gpu

Found existing installation: paddlepaddle-gpu 2.1.0.post101

Uninstalling paddlepaddle-gpu-2.1.0.post101:

Successfully uninstalled paddlepaddle-gpu-2.1.0.post101

Successfully installed paddlepaddle-gpu-2.0.0.post101

Looking in indexes: https://mirror.baidu.com/pypi/simple/

Collecting regex

[?25l Downloading https://mirror.baidu.com/pypi/packages/b5/75/fdbf7f0156d8d6181e316cd7d2da7bdeebd66858cc6663c751c41dd99d64/regex-2021.4.4-cp37-cp37m-manylinux2010_x86_64.whl (665kB)

[K |████████████████████████████████| 665kB 13.1MB/s eta 0:00:01

[?25hInstalling collected packages: regex

Successfully installed regex-2021.4.4# 解壓paddleslim和與訓練模型

# 僅在第一次運行代碼時使用

!tar -xf PaddleSlim-develop.tar

!tar -xf pretrained_model.tar

mv ./qqp_pretrained_model ./PaddleSlim-develop/demo/ofa/bert/cd PaddleSlim-develop//home/aistudio/PaddleSlim-developimport os

import time

import json

import random

import numpy as np

import paddle

import paddle.nn.functional as F

import paddlenlp.datasets as datasets

from functools import partial

from paddle.io import DataLoader

from paddlenlp.data import Stack, Tuple, Pad

from paddleslim.nas.ofa.utils import nlp_utils

from paddleslim.nas.ofa import OFA, DistillConfig, utils

from paddleslim.nas.ofa.convert_super import Convert, supernet

from paddle.metric import Metric, Accuracy, Precision, Recall

from paddlenlp.metrics import AccuracyAndF1, Mcc, PearsonAndSpearman

from paddlenlp.transformers import BertModel, BertForSequenceClassification, BertTokenizer/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/utils.py:26: DeprecationWarning: `np.int` is a deprecated alias for the builtin `int`. To silence this warning, use `int` by itself. Doing this will not modify any behavior and is safe. When replacing `np.int`, you may wish to use e.g. `np.int64` or `np.int32` to specify the precision. If you wish to review your current use, check the release note link for additional information.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

def convert_to_list(value, n, name, dtype=np.int):

[06-28 20:13:20 MainThread @utils.py:79] WRN paddlepaddle version: 2.0.0. The dynamic graph version of PARL is under development, not fully tested and supported

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/parl/remote/communication.py:38: DeprecationWarning: 'pyarrow.default_serialization_context' is deprecated as of 2.0.0 and will be removed in a future version. Use pickle or the pyarrow IPC functionality instead.

context = pyarrow.default_serialization_context()

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/pyarrow/pandas_compat.py:1027: DeprecationWarning: `np.float` is a deprecated alias for the builtin `float`. To silence this warning, use `float` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.float64` here.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

'floating': np.float,

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/__init__.py:107: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import MutableMapping

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/rcsetup.py:20: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import Iterable, Mapping

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/colors.py:53: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import SizedTASK_CLASSES = {

"qqp": (datasets.GlueQQP, AccuracyAndF1),

}

MODEL_CLASSES = {"bert": (BertForSequenceClassification, BertTokenizer), }def set_seed(args):

random.seed(args['seed'] + paddle.distributed.get_rank())

np.random.seed(args['seed'] + paddle.distributed.get_rank())

paddle.seed(args['seed'] + paddle.distributed.get_rank())數據集介紹

自然語言處理(NLP)主要包括自然語言理解(NLU)和自然語言生成(NLG)。為了推動通用和強大的自然語言理解系統的開發和研究,紐約大學、華盛頓大學和DeepMind聯合創建了一個用於模型評估、比較和分析多個自然語言理解任務的在線平台,也就是GLUE(General Language Understanding Evaluation)。GLUE包括九項任務,分別是CoLA、SST-2、MRPC、STS-B、QQP、MNLI、QNLI、RTE和WNLI。它們可被分為三類,分別是單句分類任務、相似性和釋義性任務和推理任務。

QQP

Quora Question Pairs2(QQP)數據集是來自社區問答網站Quora的問題對集合,任務是判斷一對問題對是否等效,是一個二分類問題,結果是等效或不等效兩種。QQP中的類別分佈不平衡,其中63%是負樣本,37%是正樣本,衡量指標為準確率和F1值。

QQP數據集示列:

[‘What is the best self help book you have read? Why? How did it change your life?’, ‘What are the top self help books I should read?’, ‘1’]

其中前兩個句子為用來判斷是否等效的句子,標籤為1代表兩個句子等效。

數據預處理

- convert_example: 將QQP中的數據轉化為可被模型處理的features

def convert_example(example,

tokenizer,

label_list,

max_seq_length=512,

is_test=False):

"""convert a glue example into necessary features"""

def _truncate_seqs(seqs, max_seq_length):

if len(seqs) == 1: # single sentence

# Account for [CLS] and [SEP] with "- 2"

seqs[0] = seqs[0][0:(max_seq_length - 2)]

else: # sentence pair

# Account for [CLS], [SEP], [SEP] with "- 3"

tokens_a, tokens_b = seqs

max_seq_length -= 3

while True: # truncate with longest_first strategy

total_length = len(tokens_a) + len(tokens_b)

if total_length <= max_seq_length:

break

if len(tokens_a) > len(tokens_b):

tokens_a.pop()

else:

tokens_b.pop()

return seqs

def _concat_seqs(seqs, separators, seq_mask=0, separator_mask=1):

concat = sum((seq + sep for sep, seq in zip(separators, seqs)), [])

segment_ids = sum(([i] * (len(seq) + len(sep)) for i, (sep, seq) in

enumerate(zip(separators, seqs))), [])

if isinstance(seq_mask, int):

seq_mask = [[seq_mask] * len(seq) for seq in seqs]

if isinstance(separator_mask, int):

separator_mask = [[separator_mask] * len(sep) for sep in separators]

p_mask = sum((s_mask + mask for sep, seq, s_mask, mask in

zip(separators, seqs, seq_mask, separator_mask)), [])

return concat, segment_ids, p_mask

if not is_test:

# `label_list == None` is for regression task

label_dtype = "int64" if label_list else "float32"

# get the label

label = example[-1]

example = example[:-1]

#create label maps if classification task

if label_list:

label_map = {}

for (i, l) in enumerate(label_list):

label_map[l] = i

label = label_map[label]

label = np.array([label], dtype=label_dtype)

# tokenize raw text

tokens_raw = [tokenizer(l) for l in example]

# truncate to the truncate_length,

tokens_trun = _truncate_seqs(tokens_raw, max_seq_length)

# concate the sequences with special tokens

tokens_trun[0] = [tokenizer.cls_token] + tokens_trun[0]

tokens, segment_ids, _ = _concat_seqs(tokens_trun, [[tokenizer.sep_token]] *

len(tokens_trun))

# convert the token to ids

input_ids = tokenizer.convert_tokens_to_ids(tokens)

valid_length = len(input_ids)

# The mask has 1 for real tokens and 0 for padding tokens. Only real

# tokens are attended to.

# input_mask = [1] * len(input_ids)

if not is_test:

return input_ids, segment_ids, valid_length, label

else:

return input_ids, segment_ids, valid_length以QQP train dataset中第七條數據為例,對每一步數據處理的結果進行展示。

代碼:

train_ds = dataset_class.get_datasets(['train'])

exp = train_ds[7]

print('QQP train example: ', exp)

tokens_raw = [tokenizer(l) for l in exp]

print('tokens of this example: ', tokens_raw)

tokens_trun = _truncate_seqs(tokens_raw[:2], 128)

tokens_trun[0] = [tokenizer.cls_token] + tokens_trun[0]

print('tokens after _truncate_seqs: ', tokens_trun)

tokens, segment_ids, pmask = _concat_seqs(tokens_trun, [[tokenizer.sep_token]] * len(tokens_trun))

print('tokens after _concat_seqs: ', tokens)

print('segments_ids: ', segment_ids)

print('pmask: ', pmask)

input_ids = tokenizer.convert_tokens_to_ids(tokens)

print('input ids: ', input_ids)

valid_length = len(input_ids)

print('input ids length: ', valid_length)對應的print結果:

QQP train example: ['What is the best self help book you have read? Why? How did it change your life?', 'What are the top self help books I should read?', '1']

tokens of this example: [['what', 'is', 'the', 'best', 'self', 'help', 'book', 'you', 'have', 'read', '?', 'why', '?', 'how', 'did', 'it', 'change', 'your', 'life', '?'], ['what', 'are', 'the', 'top', 'self', 'help', 'books', 'i', 'should', 'read', '?'], ['1']]

tokens after _truncate_seqs: [['[CLS]', 'what', 'is', 'the', 'best', 'self', 'help', 'book', 'you', 'have', 'read', '?', 'why', '?', 'how', 'did', 'it', 'change', 'your', 'life', '?'], ['what', 'are', 'the', 'top', 'self', 'help', 'books', 'i', 'should', 'read', '?']]

tokens after _concat_seqs: ['[CLS]', 'what', 'is', 'the', 'best', 'self', 'help', 'book', 'you', 'have', 'read', '?', 'why', '?', 'how', 'did', 'it', 'change', 'your', 'life', '?', '[SEP]', 'what', 'are', 'the', 'top', 'self', 'help', 'books', 'i', 'should', 'read', '?', '[SEP]']

segments_ids: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]

pmask: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1]

input ids: [101, 2054, 2003, 1996, 2190, 2969, 2393, 2338, 2017, 2031, 3191, 1029, 2339, 1029, 2129, 2106, 2009, 2689, 2115, 2166, 1029, 102, 2054, 2024, 1996, 2327, 2969, 2393, 2808, 1045, 2323, 3191, 1029, 102]

input ids length: 34模型構建

### reorder weights according head importance and neuron importance

def reorder_neuron_head(model, head_importance, neuron_importance):

# reorder heads and ffn neurons

for layer, current_importance in enumerate(neuron_importance):

# reorder heads

idx = paddle.argsort(head_importance[layer], descending=True)

nlp_utils.reorder_head(model.bert.encoder.layers[layer].self_attn, idx)

# reorder neurons

idx = paddle.argsort(

paddle.to_tensor(current_importance), descending=True)

nlp_utils.reorder_neuron(

model.bert.encoder.layers[layer].linear1.fn, idx, dim=1)

nlp_utils.reorder_neuron(

model.bert.encoder.layers[layer].linear2.fn, idx, dim=0)def soft_cross_entropy(inp, target):

inp_likelihood = F.log_softmax(inp, axis=-1)

target_prob = F.softmax(target, axis=-1)

return -1. * paddle.mean(paddle.sum(inp_likelihood * target_prob, axis=-1))def evaluate(model, criterion, metric, data_loader, epoch, step,

width_mult=1.0):

with paddle.no_grad():

model.eval()

metric.reset()

for batch in data_loader:

input_ids, segment_ids, labels = batch

logits = model(input_ids, segment_ids, attention_mask=[None, None])

if isinstance(logits, tuple):

logits = logits[0]

loss = criterion(logits, labels)

correct = metric.compute(logits, labels)

metric.update(correct)

results = metric.accumulate()

print("epoch: %d, batch: %d, width_mult: %s, eval loss: %f, %s: %s\n" %

(epoch, step, 'teacher' if width_mult == 100 else str(width_mult),

loss.numpy(), metric.name(), results))

model.train()### monkey patch for bert forward to accept [attention_mask, head_mask] as attention_mask

def bert_forward(self,

input_ids,

token_type_ids=None,

position_ids=None,

attention_mask=[None, None]):

wtype = self.pooler.dense.fn.weight.dtype if hasattr(

self.pooler.dense, 'fn') else self.pooler.dense.weight.dtype

if attention_mask[0] is None:

attention_mask[0] = paddle.unsqueeze(

(input_ids == self.pad_token_id).astype(wtype) * -1e9, axis=[1, 2])

embedding_output = self.embeddings(

input_ids=input_ids,

position_ids=position_ids,

token_type_ids=token_type_ids)

encoder_outputs = self.encoder(embedding_output, attention_mask)

sequence_output = encoder_outputs

pooled_output = self.pooler(sequence_output)

return sequence_output, pooled_outputBertModel.forward = bert_forwarddef print_arguments(args):

"""print arguments"""

print('----------- Configuration Arguments -----------')

for arg, value in sorted(args.items()):

print('%s: %s' % (arg, value))

print('------------------------------------------------')args = {'task_name': 'QQP',

'model_type': 'bert',

'model_name_or_path': './demo/ofa/bert/qqp_pretrained_model/',

'seed': 42,

'n_gpu': 1,

'max_seq_length': 128,

'batch_size': 32,

'learning_rate': 2e-5,

'num_train_epochs': 3,

'warmup_steps': 0,

'max_steps': -1,

'adam_epsilon': 1e-8,

'weight_decay': 0.0,

'lambda_logit': 1.0,

'logging_steps': 10,

'output_dir': './demo/ofa/bert/tmp/QQP/',

'save_steps': 500,

'width_mult_list': [1.0, 0.8333333333333334, 0.6666666666666666, 0.5],

}

print_arguments(args)----------- Configuration Arguments -----------

adam_epsilon: 1e-08

batch_size: 32

lambda_logit: 1.0

learning_rate: 2e-05

logging_steps: 10

max_seq_length: 128

max_steps: -1

model_name_or_path: ./demo/ofa/bert/qqp_pretrained_model/

model_type: bert

n_gpu: 1

num_train_epochs: 3

output_dir: ./demo/ofa/bert/tmp/QQP/

save_steps: 500

seed: 42

task_name: QQP

warmup_steps: 0

weight_decay: 0.0

width_mult_list: [1.0, 0.8333333333333334, 0.6666666666666666, 0.5]

------------------------------------------------paddle.set_device("gpu")

if paddle.distributed.get_world_size() > 1:

paddle.distributed.init_parallel_env()

set_seed(args)

args['task_name'] = args['task_name'].lower()

dataset_class, metric_class = TASK_CLASSES[args['task_name']]

model_class, tokenizer_class = MODEL_CLASSES[args['model_type']]

train_ds = dataset_class.get_datasets(['train'])

tokenizer = tokenizer_class.from_pretrained(args['model_name_or_path'])

# Constructs a BERT tokenizer. It uses a basic tokenizer to do punctuation splitting, lower casing and so on,

# and follows a WordPiece tokenizer to tokenize as subwords.

trans_func = partial(

convert_example,

tokenizer=tokenizer,

label_list=train_ds.get_labels(),

max_seq_length=args['max_seq_length'])

train_ds = train_ds.apply(trans_func, lazy=True)

train_batch_sampler = paddle.io.DistributedBatchSampler(

train_ds, batch_size=args['batch_size'], shuffle=True)

batchify_fn = lambda samples, fn=Tuple(

Pad(axis=0, pad_val=tokenizer.pad_token_id), # input

Pad(axis=0, pad_val=tokenizer.pad_token_id), # segment

Stack(), # length

Stack(dtype="int64" if train_ds.get_labels() else "float32") # label

): [data for i, data in enumerate(fn(samples)) if i != 2]

train_data_loader = DataLoader(

dataset=train_ds,

batch_sampler=train_batch_sampler,

collate_fn=batchify_fn,

num_workers=0,

return_list=True)

dev_dataset = dataset_class.get_datasets(["dev"])

dev_dataset = dev_dataset.apply(trans_func, lazy=True)

dev_batch_sampler = paddle.io.BatchSampler(

dev_dataset, batch_size=args['batch_size'], shuffle=False)

dev_data_loader = DataLoader(

dataset=dev_dataset,

batch_sampler=dev_batch_sampler,

collate_fn=batchify_fn,

num_workers=0,

return_list=True)

num_labels = 1 if train_ds.get_labels() == None else len(

train_ds.get_labels())

model = model_class.from_pretrained(

args['model_name_or_path'], num_classes=num_labels)

if paddle.distributed.get_world_size() > 1:

model = paddle.DataParallel(model)100%|██████████| 40719/40719 [00:01<00:00, 26854.93it/s]模型訓練

# Step1: Initialize a dictionary to save the weights from the origin BERT model.

origin_weights = {}

for name, param in model.named_parameters():

origin_weights[name] = param

# Step2: Convert origin model to supernet.

sp_config = supernet(expand_ratio=args['width_mult_list'])

model = Convert(sp_config).convert(model)

# Use weights saved in the dictionary to initialize supernet.

utils.set_state_dict(model, origin_weights)

del origin_weights

# Step3: Define teacher model.

teacher_model = model_class.from_pretrained(

args['model_name_or_path'], num_classes=num_labels)

# Step4: Config about distillation.

mapping_layers = ['bert.embeddings']

for idx in range(model.bert.config['num_hidden_layers']):

mapping_layers.append('bert.encoder.layers.{}'.format(idx))

default_distill_config = {

'lambda_distill': 0.1, # 蒸餾loss的縮放比例

'teacher_model': teacher_model,

'mapping_layers': mapping_layers,

}

distill_config = DistillConfig(**default_distill_config)

# Step5: Config in supernet training.

ofa_model = OFA(model,

distill_config=distill_config,

elastic_order=['width'])

criterion = paddle.nn.loss.CrossEntropyLoss() if train_ds.get_labels(

) else paddle.nn.loss.MSELoss()

metric = metric_class()

# Step6: Calculate the importance of neurons and head,

# and then reorder them according to the importance.

head_importance, neuron_importance = nlp_utils.compute_neuron_head_importance(

args['task_name'],

ofa_model.model,

dev_data_loader,

loss_fct=criterion,

num_layers=model.bert.config['num_hidden_layers'],

num_heads=model.bert.config['num_attention_heads'])

reorder_neuron_head(ofa_model.model, head_importance, neuron_importance)

lr_scheduler = paddle.optimizer.lr.LambdaDecay(

args['learning_rate'],

lambda current_step, num_warmup_steps=args['warmup_steps'],

num_training_steps=args['max_steps'] if args['max_steps'] > 0 else

(len(train_data_loader) * args['num_train_epochs']): float(

current_step) / float(max(1, num_warmup_steps))

if current_step < num_warmup_steps else max(

0.0,

float(num_training_steps - current_step) / float(

max(1, num_training_steps - num_warmup_steps))))

optimizer = paddle.optimizer.AdamW(

learning_rate=lr_scheduler,

epsilon=args['adam_epsilon'],

parameters=ofa_model.model.parameters(),

weight_decay=args['weight_decay'],

apply_decay_param_fun=lambda x: x in [

p.name for n, p in ofa_model.model.named_parameters()

if not any(nd in n for nd in ["bias", "norm"])

])

global_step = 0

tic_train = time.time()

for epoch in range(args['num_train_epochs']):

# Step7: Set current epoch and task.

ofa_model.set_epoch(epoch)

ofa_model.set_task('width')

for step, batch in enumerate(train_data_loader):

global_step += 1

input_ids, segment_ids, labels = batch

for width_mult in args['width_mult_list']:

# Step8: Broadcast supernet config from width_mult,

# and use this config in supernet training.

net_config = utils.dynabert_config(ofa_model, width_mult)

ofa_model.set_net_config(net_config)

logits, teacher_logits = ofa_model(

input_ids, segment_ids, attention_mask=[None, None])

rep_loss = ofa_model.calc_distill_loss()

logit_loss = soft_cross_entropy(logits,

teacher_logits.detach())

loss = rep_loss + args['lambda_logit'] * logit_loss

loss.backward()

optimizer.step()

lr_scheduler.step()

ofa_model.model.clear_gradients()

if global_step % args['logging_steps'] == 0:

if (not args['n_gpu'] > 1) or paddle.distributed.get_rank() == 0:

print(

"global step %d, epoch: %d, batch: %d, loss: %f, speed: %.2f step/s"

% (global_step, epoch, step, loss,

args['logging_steps'] / (time.time() - tic_train)))

tic_train = time.time()

if global_step % args['save_steps'] == 0:

tic_eval = time.time()

evaluate(

teacher_model,

criterion,

metric,

dev_data_loader,

epoch,

step,

width_mult=100)

print("eval done total : %s s" %

(time.time() - tic_eval))

for idx, width_mult in enumerate(args['width_mult_list']):

net_config = utils.dynabert_config(ofa_model, width_mult)

ofa_model.set_net_config(net_config)

tic_eval = time.time()

acc = evaluate(ofa_model, criterion, metric,

dev_data_loader, epoch, step, width_mult)

print("eval done total : %s s" %

(time.time() - tic_eval))

if (not args['n_gpu'] > 1

) or paddle.distributed.get_rank() == 0:

output_dir = os.path.join(args['output_dir'],

"model_%d" % global_step)

if not os.path.exists(output_dir):

os.makedirs(output_dir)

# need better way to get inner model of DataParallel

model_to_save = model._layers if isinstance(

model, paddle.DataParallel) else model

model_to_save.save_pretrained(output_dir)

tokenizer.save_pretrained(output_dir)/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dataloader/dataloader_iter.py:354: DeprecationWarning: `np.object` is a deprecated alias for the builtin `object`. To silence this warning, use `object` by itself. Doing this will not modify any behavior and is safe.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

if arr.dtype == np.object:

global step 34110, epoch: 2, batch: 11367, loss: 0.148229, speed: 5.31 step/s訓練結果:best model 32500

|

Width

|

Accuracy

|

F1

|

|

Teacher

|

0.9047

|

0.8751

|

|

1

|

0.9069

|

0.8776

|

|

0.83334

|

0.9055

|

0.8756

|

|

0.66667

|

0.8992

|

0.8678

|

|

0.5

|

0.8967

|

0.8645

|

|

Task

|

Metric

|

TinyBERT(L=4, D=312)

|

Result with OFA

|

|

QQP

|

Accuracy/F1

|

0.9047/0.8751

|

0.9021/0.8714

|

導出子模型

注意:運行下面一段代碼時需重啓環境

import os

import random

import time

import json

from functools import partial

import numpy as np

import paddle

import paddle.nn as nn

import paddle.nn.functional as F

from paddlenlp.transformers import BertModel, BertForSequenceClassification, BertTokenizer

from paddlenlp.utils.log import logger

from paddleslim.nas.ofa import OFA, utils

from paddleslim.nas.ofa.convert_super import Convert, supernet

from paddleslim.nas.ofa.layers import BaseBlock

MODEL_CLASSES = {"bert": (BertForSequenceClassification, BertTokenizer), }/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/utils.py:26: DeprecationWarning: `np.int` is a deprecated alias for the builtin `int`. To silence this warning, use `int` by itself. Doing this will not modify any behavior and is safe. When replacing `np.int`, you may wish to use e.g. `np.int64` or `np.int32` to specify the precision. If you wish to review your current use, check the release note link for additional information.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

def convert_to_list(value, n, name, dtype=np.int):

[06-29 11:11:07 MainThread @utils.py:79] WRN paddlepaddle version: 2.0.0. The dynamic graph version of PARL is under development, not fully tested and supported

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/parl/remote/communication.py:38: DeprecationWarning: 'pyarrow.default_serialization_context' is deprecated as of 2.0.0 and will be removed in a future version. Use pickle or the pyarrow IPC functionality instead.

context = pyarrow.default_serialization_context()

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/pyarrow/pandas_compat.py:1027: DeprecationWarning: `np.float` is a deprecated alias for the builtin `float`. To silence this warning, use `float` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.float64` here.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

'floating': np.float,

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/__init__.py:107: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import MutableMapping

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/rcsetup.py:20: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import Iterable, Mapping

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/colors.py:53: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import Sizeddef export_static_model(model, model_path, max_seq_length):

input_shape = [

paddle.static.InputSpec(

shape=[None, max_seq_length], dtype='int64'),

paddle.static.InputSpec(

shape=[None, max_seq_length], dtype='int64')

]

net = paddle.jit.to_static(model, input_spec=input_shape)

paddle.jit.save(net, model_path)def do_train(args):

paddle.set_device("gpu")

model_class, tokenizer_class = MODEL_CLASSES[args['model_type']]

config_path = os.path.join(args['model_name_or_path'], 'model_config.json')

cfg_dict = dict(json.loads(open(config_path).read()))

num_labels = cfg_dict['num_classes']

model = model_class.from_pretrained(

args['model_name_or_path'], num_classes=num_labels)

origin_model = model_class.from_pretrained(

args['model_name_or_path'], num_classes=num_labels)

sp_config = supernet(expand_ratio=[1.0, args['width_mult']])

model = Convert(sp_config).convert(model)

ofa_model = OFA(model)

sd = paddle.load(

os.path.join(args['model_name_or_path'], 'model_state.pdparams'))

ofa_model.model.set_state_dict(sd)

best_config = utils.dynabert_config(ofa_model, args['width_mult'])

ofa_model.export(

best_config,

input_shapes=[[1, args['max_seq_length']], [1, args['max_seq_length']]],

input_dtypes=['int64', 'int64'],

origin_model=origin_model)

for name, sublayer in origin_model.named_sublayers():

if isinstance(sublayer, paddle.nn.MultiHeadAttention):

sublayer.num_heads = int(args['width_mult'] * sublayer.num_heads)

output_dir = os.path.join(args['sub_model_output_dir'],

"model_width_%.5f" % args['width_mult'])

if not os.path.exists(output_dir):

os.makedirs(output_dir)

model_to_save = origin_model

model_to_save.save_pretrained(output_dir)

if args['static_sub_model'] != None:

export_static_model(origin_model, args['static_sub_model'],

args['max_seq_length'])sub_args = {'model_name_or_path': './demo/ofa/bert/tmp/QQP/model_32500/',

'max_seq_length': 128,

'sub_model_output_dir': './demo/ofa/bert/tmp/QQP/dynamic_model/',

'static_sub_model': './demo/ofa/bert/tmp/QQP/static_model',

'width_mult':0.5}

args.update(sub_args)

print_arguments(args)----------- Configuration Arguments -----------

adam_epsilon: 1e-08

batch_size: 32

lambda_logit: 1.0

learning_rate: 2e-05

logging_steps: 10

max_seq_length: 128

max_steps: -1

model_name_or_path: ./demo/ofa/bert/tmp/QQP/model_32500/

model_type: bert

n_gpu: 1

num_train_epochs: 3

output_dir: ./demo/ofa/bert/tmp/QQP/

save_steps: 500

seed: 42

static_sub_model: ./demo/ofa/bert/tmp/QQP/static_model

sub_model_output_dir: ./demo/ofa/bert/tmp/QQP/dynamic_model/

task_name: QQP

warmup_steps: 0

weight_decay: 0.0

width_mult: 0.6666666666666666

width_mult_list: [1.0, 0.8333333333333334, 0.6666666666666666, 0.5]

------------------------------------------------do_train(args)/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.embeddings.word_embeddings.weight. bert.embeddings.word_embeddings.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.embeddings.position_embeddings.weight. bert.embeddings.position_embeddings.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.embeddings.token_type_embeddings.weight. bert.embeddings.token_type_embeddings.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.self_attn.q_proj.weight. bert.encoder.layers.0.self_attn.q_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.self_attn.q_proj.bias. bert.encoder.layers.0.self_attn.q_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.self_attn.k_proj.weight. bert.encoder.layers.0.self_attn.k_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.self_attn.k_proj.bias. bert.encoder.layers.0.self_attn.k_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.self_attn.v_proj.weight. bert.encoder.layers.0.self_attn.v_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.self_attn.v_proj.bias. bert.encoder.layers.0.self_attn.v_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.self_attn.out_proj.weight. bert.encoder.layers.0.self_attn.out_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.self_attn.out_proj.bias. bert.encoder.layers.0.self_attn.out_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.linear1.weight. bert.encoder.layers.0.linear1.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.linear1.bias. bert.encoder.layers.0.linear1.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.linear2.weight. bert.encoder.layers.0.linear2.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.0.linear2.bias. bert.encoder.layers.0.linear2.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.self_attn.q_proj.weight. bert.encoder.layers.1.self_attn.q_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.self_attn.q_proj.bias. bert.encoder.layers.1.self_attn.q_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.self_attn.k_proj.weight. bert.encoder.layers.1.self_attn.k_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.self_attn.k_proj.bias. bert.encoder.layers.1.self_attn.k_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.self_attn.v_proj.weight. bert.encoder.layers.1.self_attn.v_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.self_attn.v_proj.bias. bert.encoder.layers.1.self_attn.v_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.self_attn.out_proj.weight. bert.encoder.layers.1.self_attn.out_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.self_attn.out_proj.bias. bert.encoder.layers.1.self_attn.out_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.linear1.weight. bert.encoder.layers.1.linear1.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.linear1.bias. bert.encoder.layers.1.linear1.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.linear2.weight. bert.encoder.layers.1.linear2.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.1.linear2.bias. bert.encoder.layers.1.linear2.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.self_attn.q_proj.weight. bert.encoder.layers.2.self_attn.q_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.self_attn.q_proj.bias. bert.encoder.layers.2.self_attn.q_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.self_attn.k_proj.weight. bert.encoder.layers.2.self_attn.k_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.self_attn.k_proj.bias. bert.encoder.layers.2.self_attn.k_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.self_attn.v_proj.weight. bert.encoder.layers.2.self_attn.v_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.self_attn.v_proj.bias. bert.encoder.layers.2.self_attn.v_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.self_attn.out_proj.weight. bert.encoder.layers.2.self_attn.out_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.self_attn.out_proj.bias. bert.encoder.layers.2.self_attn.out_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.linear1.weight. bert.encoder.layers.2.linear1.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.linear1.bias. bert.encoder.layers.2.linear1.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.linear2.weight. bert.encoder.layers.2.linear2.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.2.linear2.bias. bert.encoder.layers.2.linear2.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.self_attn.q_proj.weight. bert.encoder.layers.3.self_attn.q_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.self_attn.q_proj.bias. bert.encoder.layers.3.self_attn.q_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.self_attn.k_proj.weight. bert.encoder.layers.3.self_attn.k_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.self_attn.k_proj.bias. bert.encoder.layers.3.self_attn.k_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.self_attn.v_proj.weight. bert.encoder.layers.3.self_attn.v_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.self_attn.v_proj.bias. bert.encoder.layers.3.self_attn.v_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.self_attn.out_proj.weight. bert.encoder.layers.3.self_attn.out_proj.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.self_attn.out_proj.bias. bert.encoder.layers.3.self_attn.out_proj.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.linear1.weight. bert.encoder.layers.3.linear1.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.linear1.bias. bert.encoder.layers.3.linear1.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.linear2.weight. bert.encoder.layers.3.linear2.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.encoder.layers.3.linear2.bias. bert.encoder.layers.3.linear2.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.pooler.dense.weight. bert.pooler.dense.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for bert.pooler.dense.bias. bert.pooler.dense.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for classifier.weight. classifier.weight is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py:1263: UserWarning: Skip loading for classifier.bias. classifier.bias is not found in the provided dict.

warnings.warn(("Skip loading for {}. ".format(key) + str(err)))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/framework.py:686: DeprecationWarning: `np.bool` is a deprecated alias for the builtin `bool`. To silence this warning, use `bool` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.bool_` here.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

elif dtype == np.bool:

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:298: UserWarning: /tmp/tmpwp7d2gg9.py:8

The behavior of expression A - B has been unified with elementwise_sub(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_sub(X, Y, axis=0) instead of A - B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:298: UserWarning: /tmp/tmpwp7d2gg9.py:33

The behavior of expression A + B has been unified with elementwise_add(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_add(X, Y, axis=0) instead of A + B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:298: UserWarning: /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/transformer.py:378

The behavior of expression A + B has been unified with elementwise_add(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_add(X, Y, axis=0) instead of A + B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:298: UserWarning: /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/transformer.py:527

The behavior of expression A + B has been unified with elementwise_add(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_add(X, Y, axis=0) instead of A + B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:298: UserWarning: /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/transformer.py:535

The behavior of expression A + B has been unified with elementwise_add(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_add(X, Y, axis=0) instead of A + B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))可以看到導出的模型由84.6M降低至72.7M,模型參數大小減小