概述

Java 支持好幾種文件讀取方法,本文要説的是小眾的 mmap(MappedByteBuffer) 以及它與 Safepoint、JVM 服務卡頓之間的關係。本文嘗試用 eBPF 等分析方法,去證明具體環境下,問題的存在與否。

審誤和發佈本文時,我才是二陽後活過來數小時而已,寫了數週的文章實在不想再拖延發布了。如文章有錯漏,還請多多包涵和指正。

引

Java 服務卡頓,是 Java 世界永恆的話題之王。想到 Java 卡頓,大部分人的第一反應是以下關鍵詞:

- GC

- Safepoint / Stop the world(STW)

而説訪問 mmap(MappedByteBuffer) 導致 Java 卡頓可能是個小眾話題。但如果你瞭解了一些基於 Java 的重 IO 的開源項目後,就會發現,這其實不是個小眾話題。如曾經大熱的 NoSQL 數據庫 Cassandra 默認就是重度使用 mmap 去讀取數據文件和寫 commit log 的。

基本原理

JVM 寫 GC 日誌時機分析

Safepoint

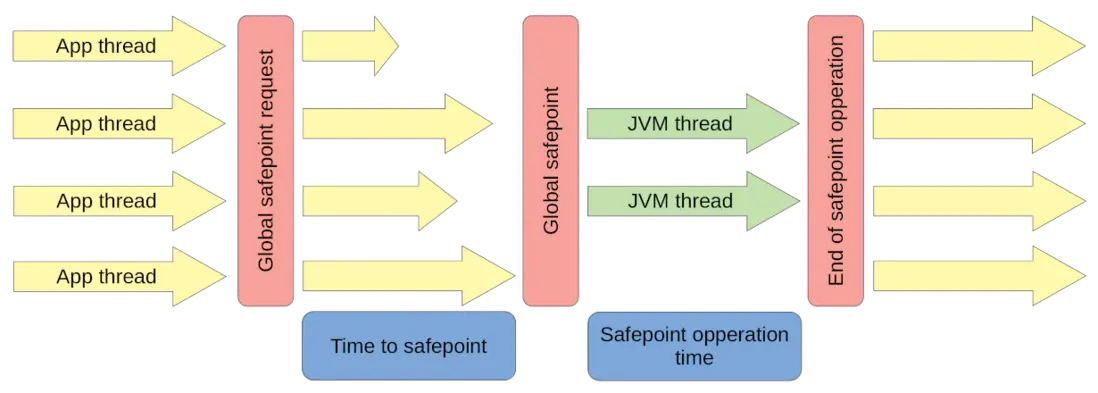

[From https://krzysztofslusarski.github.io/2022/12/12/async-manual....]

上圖大概説明了 safepoint 的主要流程。有興趣同學可以看看上面鏈接,或搜索一下,網上很多好文章説明過了。我就不搬門弄斧了。

一點需要注意的是,safepoint STOP THE WORLD(STW) 的使用者不只有 GC。還有其它。

這裏只簡單説明一下(我不是 JVM 專家,所以請謹慎使用以下觀點):

-

Global safepoint request

1.1 有一個線程提出了進入 safepoint 的請求,其中帶上

safepoint operation參數,參數其實是 STOP THE WORLD(STW) 後要執行的 Callback 操作 。可能是分配內存不足,觸發 GC。也可能是其它原因。1.2 一個叫 “

VM Thread” 的線程在收到 safepoint request 後,修改一個 JVM 全局的safepoint flag為 true(這裏只為簡單説明,如果你是行家,那麼這個 flag 其實是操作系統的內存缺頁標識) 。1.3 然後這個 “VM Thread” 就開始等待其它應用線程(App thread) 到達(進入) safepoint 。

1.4 其它應用線程(App thread)其實會高頻檢查這個 safepoint flag ,當發現為 true 時,就到達(進入) safepoint 狀態。

源碼鏈接 SafepointSynchronize::begin()

本文主要關注這裏的 “等待其它應用線程(App thread) 到達(進入) safepoint”。

-

Global safepoint

當 “

VM Thread” 發現所有 App thread 都到達 safepoint (真實的 STW 的開始) 。就開始執行safepoint operation。GC 操作是safepoint operation其中一種可能類型。源碼鏈接 RuntimeService::record_safepoint_synchronized()

-

End of safepoint operation

safepoint operation執行完畢, “VM Thread” 結束 STW 。源碼鏈接 SafepointSynchronize::end()

症狀

假設已經加入 java 命令參數:

-XX:+PrintGCApplicationStoppedTime

-XX:+PrintGCDetails

-XX:+PrintSafepointStatistics

-XX:PrintSafepointStatisticsCount=1

-XX:+SafepointTimeout

-XX:SafepointTimeoutDelay=<ms before timeout log is printed>GC log:

# 大部分時間,花在 Global safepoint request 與 Global safepoint 這間的“等待其它應用線程(App thread) 到達(進入) safepoint”

2023-09-07T13:19:50.029+0000: 58103.440: Total time for which application threads were stopped: 3.4971808 seconds, Stopping threads took: 3.3050644 seconds或 safepoint log:

[safepoint ] Application time: 0.1950250 seconds

[safepoint ] Entering safepoint region: RevokeBias

[safepoint ] Leaving safepoint region

[safepoint ] Total time for which application threads were stopped: 0.0003424 seconds, Stopping threads took: 0.0000491 seconds

///////

# SafepointSynchronize::begin: Timeout detected:

# SafepointSynchronize::begin: Timed out while spinning to reach a

# safepoint.

# SafepointSynchronize::begin: Threads which did not reach the

# safepoint:

# "SharedPool-Worker-5" #468 daemon prio=5 os_prio=0

# tid=0x00007f8785bb1f30 nid=0x4e14 runnable [0x0000000000000000]

java.lang.Thread.State: RUNNABLE

>> >

# SafepointSynchronize::begin: (End of list)

vmop [threads: total initially_running

wait_to_block] [time: spin block sync cleanup vmop]

page_trap_count

58099.941: G1IncCollectionPause [ 447 1

1 ] [ 3304 0 3305 1 190 ] 1

>>這個問題已經在 Cassandra 界有了個朵:

- JVM safepoints, mmap, and slow disks 或這裏

- Use of MappedByteBuffer could cause long STW due to TTSP (time to safepoint)

- Long unexplained time-to-safepoint (TTSP) in the JVM

那麼問題來了,怎麼證明你的環境的 java 應用存在或不存在這個問題?

診斷

環境準備

IO 卡頓的模擬方法見 [Linux 如何模擬 IO 卡頓] 一節。

生成測試文件:

sudo dd if=/dev/urandom of=/mnt/dm1/mapped.file bs=1G count=1

cp /mnt/dm1/mapped.file /tmp/mapped.file測試的目標 java 程序

// TestMMap.java

import java.util.*;

import java.io.IOException;

import java.io.RandomAccessFile;

import java.nio.MappedByteBuffer;

import java.nio.channels.FileChannel;

import java.util.concurrent.ThreadLocalRandom;

public class TestMMap {

public static void main(String[] arg) throws Exception {

TestGCLog l = new TestGCLog();

l.start();

TestMMap t = new TestMMap();

t.test(arg[0]);

}

void test(String mapfile) throws Exception {

long startTime = System.currentTimeMillis();

RandomAccessFile file = new RandomAccessFile(mapfile, "r");

FileChannel channel = file.getChannel();

// Read file into mapped buffer

MappedByteBuffer mbb =

channel.map(FileChannel.MapMode.READ_ONLY,

0, // position

channel.size());

long lastprint = System.currentTimeMillis();

while(true) {

// for (int i = 0; i < channel.size(); i++) {

long now = System.currentTimeMillis();

// System.out.print((char) mbb.get());

long p = ThreadLocalRandom.current().nextLong(0, channel.size() - 1);

mbb.position((int)p);

mbb.get();

if( System.currentTimeMillis() - now > 1 * 1000 ) {

System.out.println( new Date() + " - Read MMap: read slow: " + mbb.position() + "@" + mbb.limit() + " ," + (System.currentTimeMillis() - now) + "ms" );

}

else if( now - lastprint > 5 * 1000 ) {

System.out.println( new Date() + " - Read MMap:" + mbb.position() + "/" + mbb.limit() );

lastprint = now;

}

Thread.sleep(10);

// }

// mbb.position(0);

if(false)

break;

}

channel.close();

file.close();

System.out.println("Total read and print time: " + (System.currentTimeMillis() - startTime));

}

static class TestGCLog extends Thread {

List objList = new ArrayList();

byte[] m = new byte[100*1024];

public TestGCLog() {

super("TestGCLog");

}

@Override

public void run() {

try {

test();

} catch (Exception e) {

e.printStackTrace();

}

}

void test() throws Exception {

long lastprint = System.currentTimeMillis();

while(true) {

objList.clear();

long start = System.currentTimeMillis();

TestGCLog newObj = new TestGCLog();

long end = System.currentTimeMillis();

if( end - start > 10 ) {

System.out.println( new Date() + " - TestGCLog: slow new object: " + (end - start) + "ms" );

}

objList.add( newObj );

Thread.sleep(5);

long now = System.currentTimeMillis();

if( now - lastprint > 5 * 1000 ) {

System.out.println( new Date() + " - TestGCLog" );

lastprint = now;

}

}

}

}

}程序的主要邏輯是在 TestGCLog 線程中觸發 GC 。在 main 線程中隨機訪問 mmap 映射到內存中的文件,以觸發 page fault 和 IO 。

bpftrace 程序

#!/usr/local/bin/bpftrace

// trace-safepoint-mmap2.bt

// 程序假設環境中只有一個 java 進程

BEGIN

{}

uprobe:/usr/lib/jvm/java-17-openjdk-amd64/lib/server/libjvm.so:*SafepointSynchronize*begin*

{

// printf("java elf main. PID=%d", pid);

@java_pid[pid] = pid;

}

uprobe:/usr/lib/jvm/java-17-openjdk-amd64/lib/server/libjvm.so:*SafepointSynchronize*begin*

/@java_pid[pid]/

{

@sp_begin[pid, tid, comm, ustack] = count();

@vm_thread_tid[tid] = tid;

@vm_thread_waiting_sp_sync[tid] = tid;

@vm_thread_in_STW[tid] = tid;

@STWing[pid] = pid;

printf("%d %-6d %-16s SafepointSynchronize::begin \n", elapsed / 1e6, tid, comm);

}

uprobe:/usr/lib/jvm/java-17-openjdk-amd64/lib/server/libjvm.so:*RuntimeService*record_safepoint_synchronized*

/@java_pid[pid]/

{

@sp_synchronized[tid, comm, ustack] = count();

delete (@vm_thread_waiting_sp_sync[tid]);

delete (@STWing[pid]);

printf("%d %-6d %-16s RuntimeService::record_safepoint_synchronized\n", elapsed / 1e6, tid, comm);

}

uretprobe:/usr/lib/jvm/java-17-openjdk-amd64/lib/server/libjvm.so:*SafepointSynchronize*end*

/@java_pid[pid]/

{

delete (@vm_thread_in_STW[tid]);

printf("%d %-6d %-16s SafepointSynchronize::end\n", elapsed / 1e6, tid, comm);

printf("===========\n\n");

}

// tracepoint:exceptions:page_fault_user

// /@java_pid[pid]/

// {

// @page_fault_user[tid, comm, kstack(8)] = count();

// printf("%d page_fault_user(\"%s %d\", address=0x%lX, ip=0x%lX)\n", elapsed / 1e6, comm, tid, args->address, args->ip);

// }

kprobe:filemap_fault

/@java_pid[pid]/

{

@filemap_fault[tid, comm, kstack(8)] = count();

printf("%d %-6d %-16s filemap_fault()\n", elapsed / 1e6, tid, comm);

@filemap_fault_kstack[ tid, comm, kstack(8)] = count();

@filemap_fault_ustack[ tid, comm, ustack(8)] = count();

}

kretfunc:filemap_fault

/@java_pid[pid]/

{

printf("%d %-6d %-16s kretfunc:filemap_fault()\n", elapsed / 1e6, tid, comm);

}

// tracepoint:block:block_rq_complete,

// tracepoint:block:block_bio_complete

// /@java_pid[pid]/

// {

// @block_bio_complete[pid, tid, comm, kstack(8)] = count();

// }

kretfunc:ext4_mpage_readpages

/@java_pid[pid]/

{

$page = args->page;

$node = args->inode;

$rac = args->rac;

$nr_pages = $rac->_nr_pages;

printf ("%d %-6d %-16s kretfunc:ext4_mpage_readpages %ld %s\n", elapsed / 1e6, tid, comm, $rac->_index * 4096, str($rac->file->f_path.dentry->d_name.name));

@ext4_mpage_readpages[ tid, comm, kstack(8)] = count();

// @ext4_mpage_readpages_ustack[ tid, comm, ustack(8)] = count();

}

// kprobe:ext4_mpage_readpages

// /@java_pid[pid]/

// {

// printf ("%d kprobe:ext4_mpage_readpages %d %s\n", elapsed / 1e6, tid, comm);

// }

// tracepoint:syscalls:sys_enter_sync,

// tracepoint:syscalls:sys_enter_syncfs,

// tracepoint:syscalls:sys_enter_fsync,

// tracepoint:syscalls:sys_enter_fdatasync,

// tracepoint:syscalls:sys_enter_sync_file_range*,

// tracepoint:syscalls:sys_enter_msync

// /@java_pid[pid]/

// {

// time("%H:%M:%S ");

// printf("sync syscall: %-6d %-16s %s\n", pid, comm, probe);

// // if( @vm_thread_waiting_sp_sync[tid] ) {

// @sync_call[pid, tid, comm, probe, ustack(6)] = count();

// printf("sp sync_call: %-6d %-16s %s\n", pid, comm, probe);

// // }

// }

END

{}執行

env LD_LIBRARY_PATH=/lib/libc6-prof/x86_64-linux-gnu /usr/lib/jvm/java-17-openjdk-amd64/bin/java '-Xlog:safepoint,gc=info:stdout:level,tags,time,uptime,pid' -XX:+UnlockDiagnosticVMOptions -XX:+PreserveFramePointer -Xms128M -Xmx128M -XX:+AlwaysPreTouch -cp . TestMMap /mnt/dm1/mapped.filesudo bpftrace ./trace-safepoint-mmap2.bt跟蹤輸出:

37507 568704 java filemap_fault()

37507 568704 java kretfunc:ext4_mpage_readpages 1026187264 mapped.file

38521 568704 java kretfunc:filemap_fault()

38531 568704 java filemap_fault()

38531 568704 java kretfunc:ext4_mpage_readpages 116322304 mapped.file

39549 568704 java kretfunc:filemap_fault()

39569 568704 java filemap_fault()

39569 568704 java kretfunc:ext4_mpage_readpages 960757760 mapped.file

40601 568704 java kretfunc:filemap_fault()

40632 568704 java filemap_fault() <--- Page Fault START

40632 568704 java kretfunc:ext4_mpage_readpages 484405248 mapped.file

41386 568719 VM Thread SafepointSynchronize::begin <-- waiting for safepoint reach START

41657 568704 java kretfunc:filemap_fault() <-- Page Fault END

41658 568719 VM Thread RuntimeService::record_safepoint_synchronized <-- waiting for safepoint reach END

41658 568719 VM Thread SafepointSynchronize::end

===========

41669 568704 java filemap_fault()

41669 568704 java kretfunc:ext4_mpage_readpages 43298816 mapped.file

42681 568704 java kretfunc:filemap_fault()

42691 568704 java filemap_fault()

42691 568704 java kretfunc:ext4_mpage_readpages 828358656 mapped.file

43709 568704 java kretfunc:filemap_fault()

43719 568704 java filemap_fault()

43719 568704 java kretfunc:ext4_mpage_readpages 191881216 mapped.file

44732 568704 java kretfunc:filemap_fault()

44743 568704 java filemap_fault() <--- Page Fault START

44743 568704 java kretfunc:ext4_mpage_readpages 905154560 mapped.file

45574 568719 VM Thread SafepointSynchronize::begin <-- waiting for safepoint reach START

45753 568704 java kretfunc:filemap_fault()) <-- Page Fault END

45754 568719 VM Thread RuntimeService::record_safepoint_synchronized <-- waiting for safepoint reach END

45755 568719 VM Thread SafepointSynchronize::end可見隱患的確存在。只是視環境條件是否滿足,爆不爆發而已。

如果用回沒有 IO 卡頓的盤試試:

env LD_LIBRARY_PATH=/lib/libc6-prof/x86_64-linux-gnu /usr/lib/jvm/java-17-openjdk-amd64/bin/java '-Xlog:safepoint,gc=info:stdout:level,tags,time,uptime,pid' -XX:+UnlockDiagnosticVMOptions -XX:+PreserveFramePointer -Xms128M -Xmx128M -XX:+AlwaysPreTouch -cp . TestMMap /tmp/mapped.file問題不存在。

雲原生下可能加劇的問題

-

worker node 共享內存 或 container limit 內存後,Direct Page Reclaim

在雲環境下,一台機器可能運行很多 container 。有的 container 是高 IO 的應用,如寫日誌,這些都會佔用 page cache 和對共享的 disk 產生爭用壓力 。 整機的內存不足,或者是 container 本身的內存不足,都可能觸發 Direct Page Reclaim

- worker node 共享本地磁盤 IO 爭用

- Ceph 等 PV 網絡盤穩定性遠不如本地盤,帶寬與 latency 相對不穩定。

如以下一個配置,多個 container 可能共享了 emptyDir 用的本地盤。然後在 IO 爭用時,寫 GC 日誌就可能出現 STW 下的高 latency ,直接導致服務 API latency 超時。

apiVersion: v1

kind: Pod

metadata:

annotations:

labels:

name: xyz

spec:

containers:

- command: java ... -Xloggc:/usr/share/myapp/logs/gc.log ...

...

volumeMounts:

- mountPath: /usr/share/myapp/logs

name: myapp-logs

volumes:

- emptyDir: {}

name: myapp-logs結語

後面,我會寫另外兩編文章:《eBPF 求證坊間傳聞:Java GC 日誌可導致整個 JVM 服務卡頓?》、《如何測量 進程級別的 IO 延遲》

附錄

Linux 如何模擬 IO 卡頓

網上能搜索到的模擬 IO 卡頓大概有兩個方法:

-

device mapper delay 法

- Emulate a slow block device with dm-delay - Christophe Vu-Brugier

- Emulate a slow block device with dm-delay - flamingbytes

- dm-delay

-

systemtap 注入延遲法

- 巧用Systemtap注入延遲模擬IO設備抖動

- 使用SystemTap給I/O設備註入延遲

本文用的是 device mapper delay 法。device 本身帶了一個 delay。

# load the brd kernel module. rd_nr is the maximum number of ramdisks. rd_size is the ramdisk size in KB.

sudo modprobe brd rd_nr=1 rd_size=1048576

ls -l /dev/ram0

brw-rw---- 1 root disk 1, 0 Aug 24 20:00 /dev/ram0

sudo blockdev --getsize /dev/ram0 # Display the size in 512-byte sectors

2097152

#We specify the read write delay as 200ms, specify the write latency(e.g. 100ms) as below.

size=$(sudo blockdev --getsize /dev/ram0)

sudo dmsetup remove delayed

echo "0 $size delay /dev/ram0 0 200" | sudo dmsetup create delayed

sudo dmsetup table delayed

0 2097152 delay 1:0 0 50

ls -la /dev/mapper/ | grep delayed

lrwxrwxrwx 1 root root 7 May 19 18:37 delayed -> ../dm-0

sudo mkfs.ext4 /dev/dm-0

sudo mkdir /mnt/dm1

sudo mount /dev/dm-0 /mnt/dm1

cd /mnt/dm1另外如果 debug 需要,可以掛起 device ,直接讓 mapper device 的所有 IO 操作進程掛起(uninterruptable_sleep):

# 掛起

sudo dmsetup suspend /dev/$your_dm_dev

# 恢復

sudo dmsetup resume /dev/$your_dm_devUbuntu 下的 BPF 跟蹤堆棧由於 -fno-omit-frame-pointer 的 glibc 缺失的問題

sudo apt install libc6-prof

env LD_LIBRARY_PATH=/lib/libc6-prof/x86_64-linux-gnu /usr/lib/jvm/java-17-openjdk-amd64/bin/java ...TL;DR :

https://bugs.launchpad.net/ubuntu/+source/glibc/+bug/1908307