Preface

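Previously we used iptables and ipvs to intercept and log packets at a point every packet must pass through (POSTROUTING). This article uses a more mature component, envoy, to record the real IP of the backend pod.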

Environment setup

The environment is the same as before:

```
▶ kubectl get pod -owide
NAME                          READY   STATUS    RESTARTS   AGE    IP            NODE     NOMINATED NODE   READINESS GATES
backend-6d4cdd4c68-mqzgj      1/1     Running   4          8d     10.244.0.73   wilson   <none>           <none>
backend-6d4cdd4c68-qjp9m      1/1     Running   4          7d3h   10.244.0.74   wilson   <none>           <none>
nginx-test-54d79c7bb8-zmrff   1/1     Running   2          23h    10.244.0.75   wilson   <none>           <none>
```

```
▶ kubectl get svc
NAME              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
backend-service   ClusterIP   10.105.148.194   <none>        10000/TCP      8d
nginx-test        NodePort    10.110.71.55     <none>        80:30785/TCP   14d
```

envoy

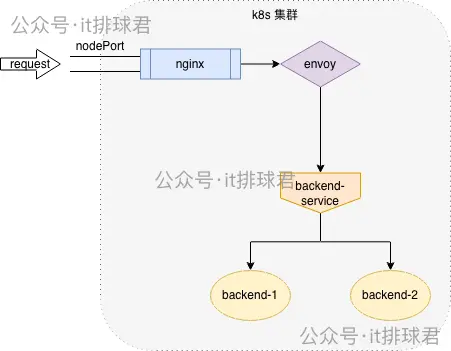

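As mentioned before, we need a load-balancing component to forward requests to the multiple backend pods. Previously that role was played by iptables/ipvs, which make request tracing difficult, so another component has to take over the load balancing. That component can sit either in front of backend or behind nginx; here we put it behind nginx.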

Create the envoy ConfigMap

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: envoy-config
data:
  envoy.yaml: |
    static_resources:
      listeners:
      - name: ingress_listener
        address:
          socket_address:
            address: 0.0.0.0
            port_value: 10000            # nginx will send its upstream traffic here
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              http_protocol_options:
                accept_http_10: true     # nginx proxy_pass speaks HTTP/1.0 by default
              common_http_protocol_options:
                idle_timeout: 300s
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: app
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/test" }
                    route:
                      cluster: app_service
              http_filters:
              - name: envoy.filters.http.router
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
              access_log:
              - name: envoy.access_loggers.stdout
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.access_loggers.stream.v3.StdoutAccessLog
                  log_format:
                    # %UPSTREAM_HOST% logs the upstream host (ip:port) envoy picked
                    text_format: "[%START_TIME%] \"%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%\" %RESPONSE_CODE% %BYTES_SENT% %DURATION% %REQ(X-REQUEST-ID)% \"%REQ(USER-AGENT)%\" \"%REQ(X-FORWARDED-FOR)%\" %UPSTREAM_HOST% %UPSTREAM_CLUSTER% %RESPONSE_FLAGS%\n"
      clusters:
      - name: app_service
        connect_timeout: 1s
        type: STRICT_DNS                 # one upstream host per resolved DNS address
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: app_service
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: "backend-service"
                    port_value: 10000
    admin:
      access_log_path: "/tmp/access.log"
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901
```
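
As a rough illustration of the `routes` section, the Python sketch below models first-match prefix routing. It is a simplification, not envoy code. `ROUTES` and `pick_cluster` are hypothetical names, and only the single `/test` route from the ConfigMap is modeled. It shows two things. First, `prefix` is a plain string prefix, so `/testing` is also routed to `app_service`. Second, a path with no matching route gets no cluster; envoy answers such requests with a 404 and logs response flag `NR`.

```python
# Minimal sketch of envoy-style first-match prefix routing (illustration only).
# ROUTES mirrors the single route in the ConfigMap; all names are hypothetical.
from typing import Optional

ROUTES = [
    ("/test", "app_service"),  # match: { prefix: "/test" } -> cluster: app_service
]


def pick_cluster(path: str) -> Optional[str]:
    """Return the cluster of the first route whose prefix matches, else None."""
    for prefix, cluster in ROUTES:
        # Plain string prefix, not a path-segment match: "/testing" also matches.
        if path.startswith(prefix):
            return cluster
    # No route matched: envoy would reply 404 and log response flag NR.
    return None


if __name__ == "__main__":
    for p in ["/test", "/test/a", "/testing", "/"]:
        print(p, "->", pick_cluster(p))
```

nginx's `location /test` in the config further below is also a prefix location, so both layers agree on which paths reach the backend.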

Create the sidecar

The sidecar runs in the same pod as nginx-test. Add the container with a patch:

```shell
kubectl patch deployment nginx-test --type='json' -p='
[
  {
    "op": "add",
    "path": "/spec/template/spec/volumes/-",
    "value": {
      "configMap": {
        "defaultMode": 420,
        "name": "envoy-config"
      },
      "name": "envoy-config"
    }
  },
  {
    "op": "add",
    "path": "/spec/template/spec/containers/-",
    "value": {
      "args": [
        "-c",
        "/etc/envoy/envoy.yaml"
      ],
      "image": "registry.cn-beijing.aliyuncs.com/wilsonchai/envoy:v1.32-latest",
      "imagePullPolicy": "IfNotPresent",
      "name": "envoy",
      "ports": [
        {
          "containerPort": 10000,
          "protocol": "TCP"
        },
        {
          "containerPort": 9901,
          "protocol": "TCP"
        }
      ],
      "volumeMounts": [
        {
          "mountPath": "/etc/envoy",
          "name": "envoy-config"
        }
      ]
    }
  }
]'
```

```
▶ kubectl get pod -owide -l app=nginx-test
NAME                          READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
nginx-test-6df974c9f9-qksd4   2/2     Running   0          1d    10.244.0.80   wilson   <none>           <none>
```
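
The two entries in that patch are JSON Patch (RFC 6902) `add` operations. The trailing `-` in `/spec/template/spec/volumes/-` and `/spec/template/spec/containers/-` means "append to the end of the array", so the existing nginx container and volumes are kept. Below is a hypothetical helper, `json_patch_add`, that implements only `add` to show those semantics. It is a sketch, not how kubectl applies patches internally. It also shows why per RFC 6902 such a patch is rejected when the Deployment has no `volumes` array yet: the parent location must already exist.

```python
# Minimal sketch of the JSON Patch (RFC 6902) "add" operation used by the
# kubectl patch above. Only "add" is modeled; json_patch_add is a hypothetical
# helper for illustration, not part of kubectl or any library.
import copy
from typing import Any, List


def _tokens(pointer: str) -> List[str]:
    """Split a JSON Pointer (RFC 6901) into unescaped reference tokens."""
    if not pointer.startswith("/"):
        raise ValueError(f"not a JSON Pointer: {pointer!r}")
    # Unescape "~1" -> "/" first, then "~0" -> "~", as RFC 6901 requires.
    return [t.replace("~1", "/").replace("~0", "~") for t in pointer[1:].split("/")]


def json_patch_add(doc: Any, path: str, value: Any) -> Any:
    """Return a copy of doc with value added at path; doc itself is unchanged."""
    result = copy.deepcopy(doc)
    *parents, last = _tokens(path)
    target = result
    for token in parents:
        # A missing parent raises KeyError/IndexError: the parent must already
        # exist (e.g. "volumes" must be present before ".../volumes/-").
        target = target[int(token)] if isinstance(target, list) else target[token]
    if isinstance(target, list):
        if last == "-":
            target.append(value)              # "-" = append after the last element
        else:
            target.insert(int(last), value)   # insert before the given index
    else:
        target[last] = value                  # object member: add or replace
    return result


if __name__ == "__main__":
    deployment = {"spec": {"template": {"spec": {
        "containers": [{"name": "nginx"}],
        "volumes": [],
    }}}}
    patched = json_patch_add(deployment, "/spec/template/spec/containers/-",
                             {"name": "envoy"})
    print([c["name"] for c in patched["spec"]["template"]["spec"]["containers"]])
    # ['nginx', 'envoy']
```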

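An extra envoy container now runs in the nginx-test pod. envoy listens on port 10000 and forwards every request for /test to backend-service:10000. Next, nginx-test's outbound traffic must be sent to envoy so that envoy does the load balancing.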

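Modify nginx-test's upstream

Replace backend-service with 127.0.0.1. The two containers are in the same pod and therefore share one network namespace, so the loopback address reaches envoy directly.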

```nginx
upstream backend_ups {
    # server backend-service:10000;  (previous upstream: the ClusterIP Service)
    server 127.0.0.1:10000;          # envoy sidecar in the same pod
}

server {
    listen 80;
    listen [::]:80;
    server_name localhost;

    location /test {
        proxy_pass http://backend_ups;
    }
}
```

The change takes effect after a restart.

Verification

Run `curl 10.22.12.178:30785/test` while following envoy's log with `kubectl logs -f -l app=nginx-test -c envoy`:

```
[2025-12-16T09:45:56.365Z] "GET /test HTTP/1.0" 200 40 0 99032619-a060-481d-8f0d-9d773fad9b12 "curl/7.81.0" "-" 10.105.148.194:10000 app_service -
```

Huh? The upstream_host field still shows 10.105.148.194. That is the Service IP, not the real IP of a backend pod. What is going on?
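
To check this mechanically rather than by eye, the Python sketch below parses one line in the `text_format` defined in the ConfigMap and classifies `%UPSTREAM_HOST%`. The two CIDRs are assumptions. 10.96.0.0/12 is kubeadm's default Service CIDR and 10.244.0.0/16 is flannel's default pod CIDR. They happen to contain the Service and pod IPs seen in this cluster, but check your own cluster's settings. `parse_line` and `classify_upstream` are hypothetical names, and only IPv4 `ip:port` hosts are handled.

```python
# Sketch: parse one envoy access-log line (text_format from the ConfigMap) and
# classify UPSTREAM_HOST. The CIDRs below are ASSUMPTIONS (kubeadm / flannel
# defaults that match the IPs in this cluster); adjust them to your cluster.
import ipaddress
import re

LOG_RE = re.compile(
    r'^\[(?P<start_time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<code>\d+) (?P<bytes_sent>\d+) (?P<duration>\d+) '
    r'(?P<request_id>\S+) '
    r'"(?P<user_agent>[^"]*)" "(?P<xff>[^"]*)" '
    r'(?P<upstream_host>\S+) (?P<upstream_cluster>\S+) (?P<flags>\S+)$'
)

SERVICE_CIDR = ipaddress.ip_network("10.96.0.0/12")  # assumed Service CIDR
POD_CIDR = ipaddress.ip_network("10.244.0.0/16")     # assumed pod CIDR


def parse_line(line: str) -> dict:
    """Split one access-log line into named fields (all values are strings)."""
    m = LOG_RE.match(line.strip())
    if m is None:
        raise ValueError(f"unrecognised log line: {line!r}")
    return m.groupdict()


def classify_upstream(upstream_host: str) -> str:
    """Return 'pod', 'service', 'other' or 'none' for an IPv4 ip:port host."""
    if upstream_host == "-":  # envoy prints "-" when no upstream was chosen
        return "none"
    ip = ipaddress.ip_address(upstream_host.rsplit(":", 1)[0])
    if ip in POD_CIDR:
        return "pod"
    if ip in SERVICE_CIDR:
        return "service"
    return "other"


if __name__ == "__main__":
    line = ('[2025-12-16T09:45:56.365Z] "GET /test HTTP/1.0" 200 40 0 '
            '99032619-a060-481d-8f0d-9d773fad9b12 "curl/7.81.0" "-" '
            '10.105.148.194:10000 app_service -')
    entry = parse_line(line)
    print(entry["upstream_host"], classify_upstream(entry["upstream_host"]))
    # 10.105.148.194:10000 service
```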

Solving the problem

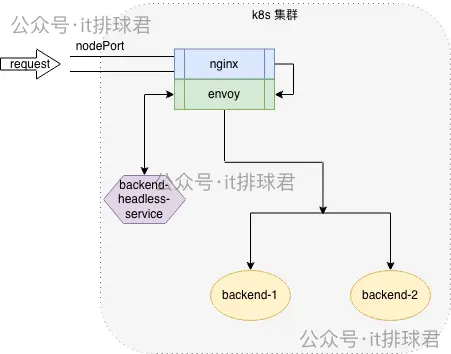

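Think back to how a load balancer works: it forwards each request to one of the backend real servers according to some algorithm (e.g. rr, wlc). But the only backend we gave envoy is still the k8s Service backend-service. That name resolves to a single ClusterIP, so envoy always picks that one address, and kube-proxy (iptables/ipvs) then chooses the actual pod. So although this setup adds an envoy layer, the load balancing is essentially still done by the k8s Service.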

Besides load balancing, a k8s Service provides another important function: service discovery. So we need the Service for service discovery, but must not use its load balancing.

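Fortunately, k8s offers the headless Service for exactly this need. A DNS lookup of a headless Service returns the IPs of its ready pods rather than a single ClusterIP, and leaves the load balancing to the caller. OK, let's try a headless Service.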
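
The difference between the two Service types shows up in what envoy's `STRICT_DNS` cluster gets back from DNS. The Python sketch below is a simplified model, not envoy's implementation. Each resolved address becomes one upstream host, and `ROUND_ROBIN` cycles through them. Real envoy also re-resolves the name periodically; that is omitted here. `resolve_ipv4`, `StrictDnsCluster`, `cluster_ip_dns` and `headless_dns` are hypothetical names. The two fake resolvers stand in for cluster DNS, using the ClusterIP and pod IPs listed earlier.

```python
# Simplified model of an envoy STRICT_DNS cluster with lb_policy ROUND_ROBIN:
# every address the name resolves to becomes one upstream host, and picks
# rotate over those hosts. Hypothetical names; not envoy's implementation
# (no periodic re-resolution, health checking or retries).
import itertools
import socket
from typing import Callable, List

Resolver = Callable[[str, int], List[str]]


def resolve_ipv4(name: str, port: int) -> List[str]:
    """Distinct IPv4 addresses for name, in the order the resolver returned them."""
    infos = socket.getaddrinfo(name, port, socket.AF_INET, socket.SOCK_STREAM)
    # info[4] is the sockaddr (ip, port); dict.fromkeys dedupes while keeping order.
    return list(dict.fromkeys(info[4][0] for info in infos))


class StrictDnsCluster:
    def __init__(self, name: str, port: int, resolver: Resolver = resolve_ipv4):
        # One upstream host per resolved address.
        self.hosts = [f"{ip}:{port}" for ip in resolver(name, port)]
        if not self.hosts:
            raise LookupError(f"{name} resolved to no addresses")
        self._next = itertools.cycle(self.hosts)

    def pick(self) -> str:
        """Next host in round-robin order; this is what UPSTREAM_HOST would log."""
        return next(self._next)


def cluster_ip_dns(name: str, port: int) -> List[str]:
    return ["10.105.148.194"]              # ClusterIP Service: a single VIP


def headless_dns(name: str, port: int) -> List[str]:
    return ["10.244.0.73", "10.244.0.74"]  # headless Service: one A record per ready pod


if __name__ == "__main__":
    vip = StrictDnsCluster("backend-service", 10000, cluster_ip_dns)
    print([vip.pick() for _ in range(3)])
    # ['10.105.148.194:10000', '10.105.148.194:10000', '10.105.148.194:10000']
    pods = StrictDnsCluster("backend-headless-service", 10000, headless_dns)
    print([pods.pick() for _ in range(3)])
    # ['10.244.0.73:10000', '10.244.0.74:10000', '10.244.0.73:10000']
```

With a single VIP there is only one host to balance over, so every log line shows the ClusterIP and kube-proxy chooses the pod. With the headless name, envoy itself chooses a pod and can therefore log it.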

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend-headless-service
spec:
  clusterIP: None   # no virtual IP: this is what makes the Service headless
  selector:
    app: backend
  ports:
  - name: http
    port: 10000
    targetPort: 10000
```

Here `clusterIP: None` is the key to creating a headless Service.

Then change envoy's config so that it forwards to the headless Service:

```yaml
...
  clusters:
  - name: app_service
    connect_timeout: 1s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: app_service
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: "backend-headless-service"   # was "backend-service"
                port_value: 10000
...
```

```
[2025-12-16T10:05:56.365Z] "GET /test HTTP/1.0" 200 40 0 2b029187-cddb-4278-99b8-2953a7e841a0 "curl/7.81.0" "-" 10.244.0.81:10000 app_service -
[2025-12-16T10:05:57.453Z] "GET /test HTTP/1.0" 200 40 1 384f9394-7ff9-4abb-b0f8-f9b69f2ba992 "curl/7.81.0" "-" 10.244.0.82:10000 app_service -
```

Requests are now indeed forwarded to the real backend pod IPs.
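
With more traffic, a quick tally of `%UPSTREAM_HOST%` confirms that requests are spread over several pods. This Python sketch assumes the `text_format` from the ConfigMap, in which UPSTREAM_HOST is followed only by UPSTREAM_CLUSTER and RESPONSE_FLAGS, so it is the third token from the end. `upstream_counts` is a hypothetical helper. It keeps only lines for the given cluster, because envoy's own startup logs share the container's stdout. The sample lines are the two log lines above. You could equally feed it the lines printed by `kubectl logs -l app=nginx-test -c envoy`.

```python
# Sketch: tally UPSTREAM_HOST for one upstream cluster from envoy access-log
# lines in the text_format configured above. upstream_counts is hypothetical.
from collections import Counter
from typing import Iterable


def upstream_counts(lines: Iterable[str], cluster: str = "app_service") -> Counter:
    """Count requests per UPSTREAM_HOST for the given upstream cluster."""
    counts: Counter = Counter()
    for line in lines:
        # The format ends with: UPSTREAM_HOST UPSTREAM_CLUSTER RESPONSE_FLAGS
        parts = line.strip().rsplit(" ", 3)
        if len(parts) < 4:
            continue  # blank or too-short line
        _, upstream_host, upstream_cluster, _flags = parts
        if upstream_cluster != cluster:
            continue  # not an access-log line for this cluster (e.g. envoy startup logs)
        counts[upstream_host] += 1
    return counts


if __name__ == "__main__":
    sample = [
        '[2025-12-16T10:05:56.365Z] "GET /test HTTP/1.0" 200 40 0 2b029187-cddb-4278-99b8-2953a7e841a0 "curl/7.81.0" "-" 10.244.0.81:10000 app_service -',
        '[2025-12-16T10:05:57.453Z] "GET /test HTTP/1.0" 200 40 1 384f9394-7ff9-4abb-b0f8-f9b69f2ba992 "curl/7.81.0" "-" 10.244.0.82:10000 app_service -',
    ]
    for host, n in upstream_counts(sample).most_common():
        print(n, host)
```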

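Summary

The current architecture:

Every pod has its own envoy sidecar. To save envoy resources, several pods can share one envoy. Deploy envoy as a DaemonSet, one per node, and have the pods scheduled on each node forward to that node's envoy.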

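Contact me

- Contact me for a deeper discussion

That concludes this article.

My knowledge is limited. If there are any mistakes or omissions, please don't hesitate to point them out...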