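Preface

Previously I used nginx-ingress-controller to record the real backend IP. But one reader pointed out that he does not use nginx-ingress-controller, only plain nginx. How do we record the real backend IP in that case?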

Environment setup

nginx:

upstream backend_ups {
    server backend-service:10000;
}

server {
    listen 80;
    listen [::]:80;
    server_name localhost;

    location /test {
        proxy_pass http://backend_ups;
    }
}

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test
  namespace: default
spec:
  selector:
    matchLabels:
      app: nginx-test
  template:
    metadata:
      labels:
        app: nginx-test
    spec:
      containers:
      - image: registry.cn-beijing.aliyuncs.com/wilsonchai/nginx:latest
        imagePullPolicy: IfNotPresent
        name: nginx-test
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/nginx/conf.d/default.conf
          name: nginx-config
          subPath: default.conf
        - mountPath: /etc/nginx/nginx.conf
          name: nginx-base-config
          subPath: nginx.conf
      volumes:
      - configMap:
          defaultMode: 420
          name: nginx-config
        name: nginx-config
      - configMap:
          defaultMode: 420
          name: nginx-base-config
        name: nginx-base-config
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-test
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-test
  type: NodePort

backend:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: backend
  template:
    metadata:
      labels:
        app: backend
    spec:
      containers:
      - image: backend-service:v1
        imagePullPolicy: Never
        name: backend
        ports:
        - containerPort: 10000
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: backend-service
  namespace: default
spec:
  ports:
  - port: 10000
    protocol: TCP
    targetPort: 10000
  selector:
    app: backend
  type: ClusterIP

Once everything is deployed, check the result:

▶ kubectl get pod -owide
NAME                         READY   STATUS    RESTARTS   AGE     IP            NODE     NOMINATED NODE   READINESS GATES
backend-6d4cdd4c68-mqzgj     1/1     Running   0          6m3s    10.244.0.64   wilson   <none>           <none>
backend-6d4cdd4c68-qjp9m     1/1     Running   0          6m5s    10.244.0.66   wilson   <none>           <none>
nginx-test-b9bcf66d7-2phvh   1/1     Running   0          6m20s   10.244.0.67   wilson   <none>           <none>

▶ kubectl get svc
NAME              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
backend-service   ClusterIP   10.105.148.194   <none>        10000/TCP      5m
nginx-test        NodePort    10.110.71.55     <none>        80:30785/TCP   5m2s

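The architecture now looks roughly like this:

Try a request to nginx:

▶ curl 127.0.0.1:30785/test
i am backend in backend-6d4cdd4c68-qjp9m

The request was reverse-proxied to the backend. Now look at the nginx log:

10.244.0.1 - - [04/Dec/2025:07:27:17 +0000] "GET /test HTTP/1.1" 200 10.105.148.194:10000 40 "-" "curl/7.81.0" "-"

- As expected, 10.105.148.194 is the IP of backend-service, not a pod IP.

- In the nginx configuration the upstream is backend-service, so the load balancing is done by the k8s Service. At the nginx layer there is therefore no way to obtain the backend pod IP.

- What we need is to turn on logging at the layer that really does the load balancing. Then the real server each request is forwarded to becomes visible.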
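To check this over many requests rather than a single line, the upstream address can be pulled out of each access-log entry. This is only a minimal sketch: it assumes the log layout of the sample line above (upstream address right after the status code, which makes it the tenth whitespace-separated field) and a request line without embedded spaces. `upstream_of` is a hypothetical helper name.

```shell
# Hypothetical helper: print the upstream address of each access-log line on stdin.
# Assumes the layout of the sample line:
#   ... "GET /test HTTP/1.1" 200 <upstream> 40 ...
upstream_of() {
    awk '{ print $10 }'
}

# Example with the log line from this post
line='10.244.0.1 - - [04/Dec/2025:07:27:17 +0000] "GET /test HTTP/1.1" 200 10.105.148.194:10000 40 "-" "curl/7.81.0" "-"'
printf '%s\n' "$line" | upstream_of
```

For this line the helper prints the Service address `10.105.148.194:10000`, which matches the CLUSTER-IP of backend-service and not either pod IP.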

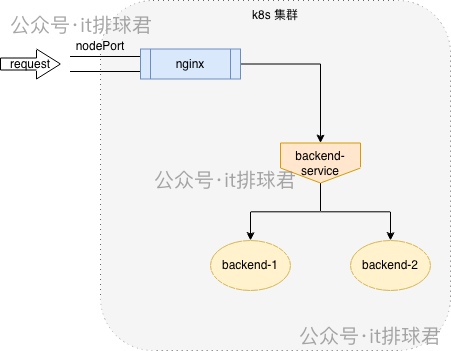

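iptables

If the forwarding is done by iptables, it is worth reviewing how iptables forwards packets:

- After a packet arrives at the network interface, it enters PREROUTING.

- It then goes through the routing decision, which splits into two paths based on the destination address:

  - Packets for the local host go through INPUT and are then handed up to the application.

  - Packets not for the local host go through FORWARD and then POSTROUTING.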
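The two paths can be written down as a toy model to keep them straight. It only covers packets arriving on an interface, as described above, and `chain_path` is a hypothetical name used purely for illustration.

```shell
# Toy model of the path described above for a packet arriving on an interface.
# $1 is "local" when the routing decision finds the destination on this host;
# any other value means the packet has to be forwarded.
chain_path() {
    if [ "$1" = "local" ]; then
        echo "PREROUTING -> INPUT"
    else
        echo "PREROUTING -> FORWARD -> POSTROUTING"
    fi
}

chain_path local     # packet for a process on this host
chain_path forward   # packet routed on to another host or pod
```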

Exploring the rules

Let's look at the actual rules:

- First check PREROUTING, where we find the chain added by k8s, KUBE-SERVICES:

  ▶ sudo iptables -L PREROUTING -t nat
  Chain PREROUTING (policy ACCEPT)
  target         prot opt source     destination
  KUBE-SERVICES  all  --  anywhere   anywhere     /* kubernetes service portals */
  ...

- Next inspect KUBE-SERVICES, which holds the rules for many services:

  ▶ sudo iptables -L KUBE-SERVICES -t nat
  Chain KUBE-SERVICES (2 references)
  target                     prot opt source     destination
  KUBE-SVC-W67AXLFK7VEUVN6G  tcp  --  anywhere   10.110.71.55     /* default/nginx-test cluster IP */
  KUBE-SVC-EDNDUDH2C75GIR6O  tcp  --  anywhere   10.98.224.124    /* ingress-nginx/ingress-nginx-controller:https cluster IP */
  KUBE-SVC-ERIFXISQEP7F7OF4  tcp  --  anywhere   10.96.0.10       /* kube-system/kube-dns:dns-tcp cluster IP */
  KUBE-SVC-JD5MR3NA4I4DYORP  tcp  --  anywhere   10.96.0.10       /* kube-system/kube-dns:metrics cluster IP */
  KUBE-SVC-ZZAJ2COS27FT6J6V  tcp  --  anywhere   10.105.148.194   /* default/backend-service cluster IP */
  KUBE-SVC-NPX46M4PTMTKRN6Y  tcp  --  anywhere   10.96.0.1        /* default/kubernetes:https cluster IP */
  KUBE-SVC-CG5I4G2RS3ZVWGLK  tcp  --  anywhere   10.98.224.124    /* ingress-nginx/ingress-nginx-controller:http cluster IP */
  KUBE-SVC-EZYNCFY2F7N6OQA2  tcp  --  anywhere   10.101.164.9     /* ingress-nginx/ingress-nginx-controller-admission:https-webhook cluster IP */
  KUBE-SVC-TCOU7JCQXEZGVUNU  udp  --  anywhere   10.96.0.10       /* kube-system/kube-dns:dns cluster IP */

- Find the chain that belongs to the target service backend-service, KUBE-SVC-ZZAJ2COS27FT6J6V, and keep going:

  ▶ sudo iptables -L KUBE-SVC-ZZAJ2COS27FT6J6V -t nat
  Chain KUBE-SVC-ZZAJ2COS27FT6J6V (1 references)
  target                     prot opt source           destination
  KUBE-MARK-MASQ             tcp  --  !10.244.0.0/16   10.105.148.194   /* default/backend-service cluster IP */
  KUBE-SEP-GYMSSX4TMUPRH3OB  all  --  anywhere         anywhere         /* default/backend-service -> 10.244.0.64:10000 */ statistic mode random probability 0.50000000000
  KUBE-SEP-EZWMSI6QFXP3WRHV  all  --  anywhere         anywhere         /* default/backend-service -> 10.244.0.66:10000 */

- The conclusion is already visible here: there are two chains, one per pod. Look further into one of them, KUBE-SEP-GYMSSX4TMUPRH3OB:

  ▶ sudo iptables -L KUBE-SEP-GYMSSX4TMUPRH3OB -t nat
  Chain KUBE-SEP-GYMSSX4TMUPRH3OB (1 references)
  target          prot opt source        destination
  KUBE-MARK-MASQ  all  --  10.244.0.64   anywhere     /* default/backend-service */
  DNAT            tcp  --  anywhere      anywhere     /* default/backend-service */ tcp to:10.244.0.64:10000

- These rules show that a packet entering KUBE-SEP-GYMSSX4TMUPRH3OB is DNATed, with its destination IP rewritten to 10.244.0.64. By the same logic, the other chain rewrites the destination IP to 10.244.0.66. So it looks as if adding a LOG rule here is all we need to trace which peer IP is chosen:

  sudo iptables -t nat -I KUBE-SEP-GYMSSX4TMUPRH3OB 1 -j LOG --log-prefix "backend-service-pod: " --log-level 4
  sudo iptables -t nat -I KUBE-SEP-EZWMSI6QFXP3WRHV 1 -j LOG --log-prefix "backend-service-pod: " --log-level 4

- Here is the overall result:

  ▶ sudo iptables -L KUBE-SEP-GYMSSX4TMUPRH3OB -t nat
  Chain KUBE-SEP-GYMSSX4TMUPRH3OB (1 references)
  target          prot opt source        destination
  LOG             all  --  anywhere      anywhere     LOG level warning prefix "backend-service-pod: "
  KUBE-MARK-MASQ  all  --  10.244.0.64   anywhere     /* default/backend-service */
  DNAT            tcp  --  anywhere      anywhere     /* default/backend-service */ tcp to:10.244.0.64:10000

  ▶ sudo iptables -L KUBE-SEP-EZWMSI6QFXP3WRHV -t nat
  Chain KUBE-SEP-EZWMSI6QFXP3WRHV (1 references)
  target          prot opt source        destination
  LOG             all  --  anywhere      anywhere     LOG level warning prefix "backend-service-pod: "
  KUBE-MARK-MASQ  all  --  10.244.0.66   anywhere     /* default/backend-service */
  DNAT            tcp  --  anywhere      anywhere     /* default/backend-service */ tcp to:10.244.0.66:10000

- Both chains now have logging in place, so start testing:

  - Send a request to nginx:

    curl 127.0.0.1:30785/test

  - Check the log:

    tail -f /var/log/syslog | grep backend-service
    Dec 4 17:17:30 wilson kernel: [109258.569426] backend-service-pod: IN=cni0 OUT= PHYSIN=veth1dc60dd3 MAC=76:d8:f3:a1:f8:1b:a2:79:4b:23:58:d8:08:00 SRC=10.244.0.70 DST=10.105.148.194 LEN=60 TOS=0x00 PREC=0x00 TTL=64 ID=64486 DF PROTO=TCP SPT=51596 DPT=10000 WINDOW=64860 RES=0x00 SYN URGP=0

What?! Why does DST still show 10.105.148.194?
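One way to make this mismatch easy to spot is to pull the DST field out of the kernel log line and compare it with the Service ClusterIP. This is a small sketch: `log_field` is a hypothetical helper, and it assumes the `KEY=value` layout of the LOG line shown above.

```shell
# Hypothetical helper: print the value of one KEY=value field (e.g. DST)
# from kernel LOG lines read on stdin.
log_field() {
    # Put every space-separated token on its own line, then pick the key.
    tr ' ' '\n' | awk -F= -v key="$1" '$1 == key { print $2 }'
}

line='Dec 4 17:17:30 wilson kernel: [109258.569426] backend-service-pod: IN=cni0 OUT= PHYSIN=veth1dc60dd3 MAC=76:d8:f3:a1:f8:1b:a2:79:4b:23:58:d8:08:00 SRC=10.244.0.70 DST=10.105.148.194 LEN=60 TOS=0x00 PREC=0x00 TTL=64 ID=64486 DF PROTO=TCP SPT=51596 DPT=10000 WINDOW=64860 RES=0x00 SYN URGP=0'

dst=$(printf '%s\n' "$line" | log_field DST)
if [ "$dst" = "10.105.148.194" ]; then
    echo "DST is still the Service ClusterIP: $dst"
else
    echo "DST was rewritten to: $dst"
fi
```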

Solving the problem

- Look at the chain rules again. When the LOG rule fires, DNAT has not happened yet, so DST is still the service IP. But if the LOG rule were placed after the DNAT rule, a packet that matches DNAT would leave the chain without ever reaching LOG, so nothing would be recorded either.

  ▶ sudo iptables -L KUBE-SEP-GYMSSX4TMUPRH3OB -t nat
  Chain KUBE-SEP-GYMSSX4TMUPRH3OB (1 references)
  target          prot opt source        destination
  LOG             all  --  anywhere      anywhere     LOG level warning prefix "backend-service-pod: "
  KUBE-MARK-MASQ  all  --  10.244.0.64   anywhere     /* default/backend-service */
  DNAT            tcp  --  anywhere      anywhere     /* default/backend-service */ tcp to:10.244.0.64:10000

- So we go back to how iptables works. Our request first enters the PREROUTING chain, then the FORWARD chain, and finally the POSTROUTING chain. The DNAT has already been applied in PREROUTING, so the log can be recorded in either of the two later chains. Here I choose POSTROUTING:

  iptables -t nat -I POSTROUTING -p tcp -d 10.244.0.64 -j LOG --log-prefix "backend-service: "
  iptables -t nat -I POSTROUTING -p tcp -d 10.244.0.66 -j LOG --log-prefix "backend-service: "

  ▶ sudo iptables -L POSTROUTING -t nat
  Chain POSTROUTING (policy ACCEPT)
  target  prot opt source     destination
  LOG     tcp  --  anywhere   10.244.0.66   LOG level warning prefix "backend-service: "
  LOG     tcp  --  anywhere   10.244.0.64   LOG level warning prefix "backend-service: "
  ...

- Test once more:

  Dec 4 17:33:23 wilson kernel: [110211.770728] backend-service: IN= OUT=cni0 PHYSIN=veth1dc60dd3 PHYSOUT=vetha515043b SRC=10.244.0.70 DST=10.244.0.64 LEN=60 TOS=0x00 PREC=0x00 TTL=64 ID=59709 DF PROTO=TCP SPT=33454 DPT=10000 WINDOW=64860 RES=0x00 SYN URGP=0
  Dec 4 17:33:24 wilson kernel: [110213.141975] backend-service: IN= OUT=cni0 PHYSIN=veth1dc60dd3 PHYSOUT=veth0a8a2dd3 SRC=10.244.0.70 DST=10.244.0.66 LEN=60 TOS=0x00 PREC=0x00 TTL=64 ID=54719 DF PROTO=TCP SPT=33468 DPT=10000 WINDOW=64860 RES=0x00 SYN URGP=0
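With DST now showing pod IPs, the log lines can be tallied per pod to see how requests are spread. This is a rough sketch that assumes the lines carry the `backend-service: ` prefix used above; `count_by_dst` is a hypothetical name. Because the nat table is only consulted for the first packet of a connection, each line stands for one new connection, not one packet.

```shell
# Hypothetical helper: count logged connections per destination pod IP.
# Reads kernel LOG lines on stdin and prints "<pod-ip> <count>" per pod.
count_by_dst() {
    grep 'backend-service: ' |
        grep -o 'DST=[^ ]*' |
        cut -d= -f2 |
        sort |
        uniq -c |
        awk '{ print $2, $1 }'
}

# Example: the two log lines from the test above
printf '%s\n' \
    'Dec 4 17:33:23 wilson kernel: [110211.770728] backend-service: IN= OUT=cni0 PHYSIN=veth1dc60dd3 PHYSOUT=vetha515043b SRC=10.244.0.70 DST=10.244.0.64 LEN=60 TOS=0x00 PREC=0x00 TTL=64 ID=59709 DF PROTO=TCP SPT=33454 DPT=10000 WINDOW=64860 RES=0x00 SYN URGP=0' \
    'Dec 4 17:33:24 wilson kernel: [110213.141975] backend-service: IN= OUT=cni0 PHYSIN=veth1dc60dd3 PHYSOUT=veth0a8a2dd3 SRC=10.244.0.70 DST=10.244.0.66 LEN=60 TOS=0x00 PREC=0x00 TTL=64 ID=54719 DF PROTO=TCP SPT=33468 DPT=10000 WINDOW=64860 RES=0x00 SYN URGP=0' |
    count_by_dst
```

On a live node the same function could be fed from the syslog file, for example `count_by_dst < /var/log/syslog`.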

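iptables summary

This test shows that plain Linux iptables rules can still trace the path of every request. Along the way we reviewed how iptables works and explored how k8s forwards traffic with iptables. There are several problems, though:

- Whenever a pod IP changes, the logging rules have to change too. In an environment where pods come and go all the time, that takes a lot of maintenance effort.

- Every new k8s node needs the rules added by hand, which is yet more maintenance.

- This approach touches the underlying forwarding rules. A careless add, delete or edit can cause unpredictable damage and may even bring down the cluster.

So this approach is not really usable in production.

The detailed analysis here is only meant to prove that the idea is right: log at the layer that does the load balancing, and you get the real server.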
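To give a feel for the maintenance burden listed above: every time the pod IPs change, the LOG rules have to be regenerated. The sketch below only prints the commands (a dry run) and never calls iptables itself. `gen_log_rules` is a hypothetical helper, and where the current pod IPs come from (for example the Service's endpoints) is left as an assumption.

```shell
# Hypothetical dry-run helper: read pod IPs (one per line) on stdin and print
# the POSTROUTING LOG rule for each, in the same form as the rules used earlier.
# It prints commands only; nothing is applied.
gen_log_rules() {
    while IFS= read -r ip; do
        [ -n "$ip" ] || continue   # skip blank lines
        printf 'iptables -t nat -I POSTROUTING -p tcp -d %s -j LOG --log-prefix "backend-service: "\n' "$ip"
    done
}

# Example with the two pod IPs from this post
printf '%s\n' 10.244.0.64 10.244.0.66 | gen_log_rules
```

Rules for pods that no longer exist would also have to be removed again with `iptables -D`, on every node, which is exactly the kind of upkeep that makes this approach a poor fit for production.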

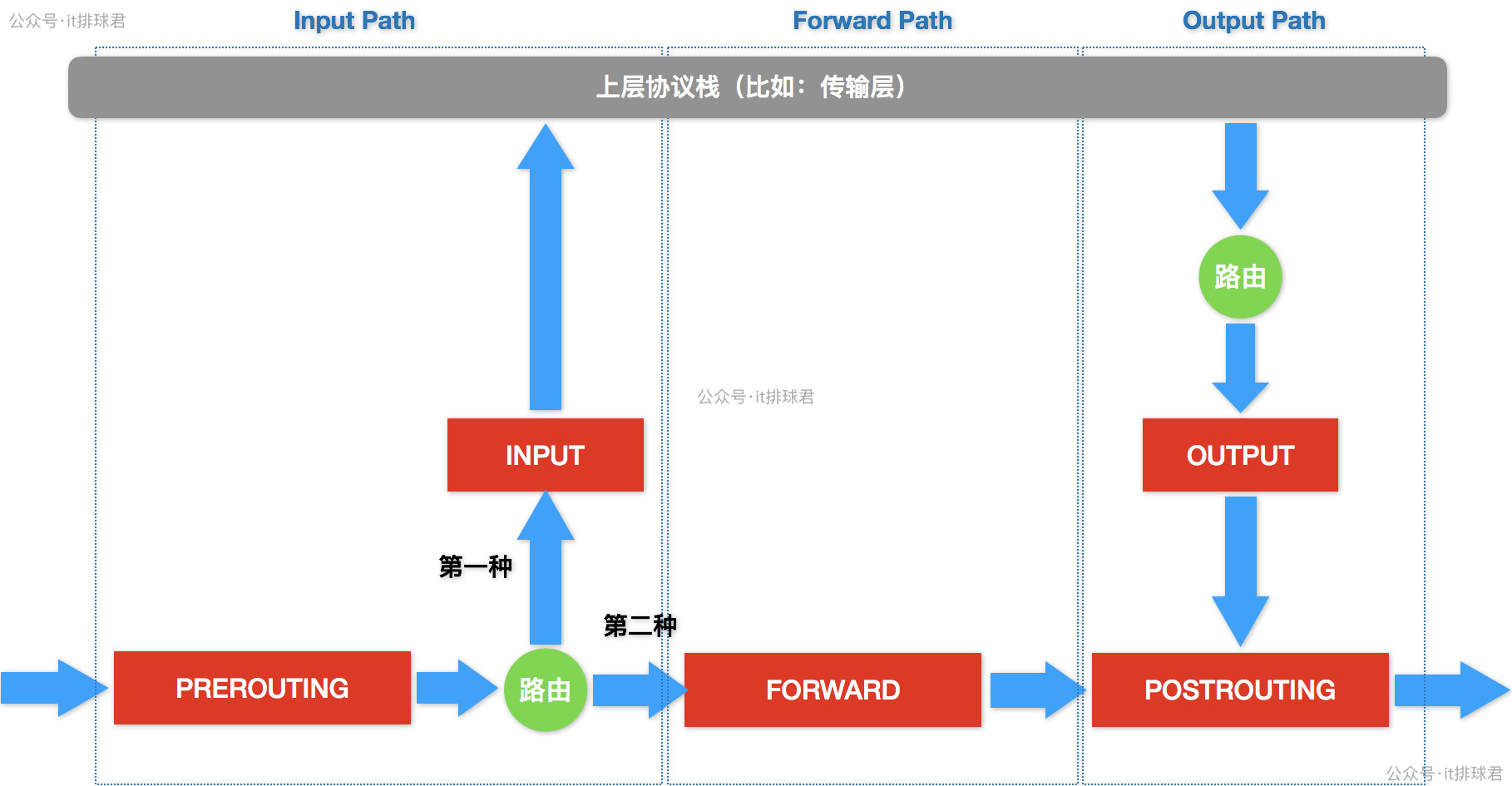

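ipvs

ipvs forwards packets inside the kernel and does not record access logs. The iptables approach still works, though: record the log on the POSTROUTING chain.

When k8s runs in ipvs mode, that does not mean ipvs alone does the forwarding. ipvs and iptables work together to get the job done.

ipvs is a core module built into the Linux kernel. It hooks into the Netfilter framework at the INPUT (LOCAL_IN) hook point.

When the destination IP of a request is an ipvs VIP, ipvs steps in, "hijacks" the packet, matches it against its rules and rewrites it, for example with DNAT:

TCP  10.105.148.194:10000 rr
  -> 10.244.0.73:10000            Masq    1      0          0
  -> 10.244.0.74:10000            Masq    1      0          0

- If the rewritten destination IP is the local host, ipvs hands the packet straight to the application.

- If the rewritten destination IP is not the local host, the packet goes through routing again and is then sent out through FORWARD and POSTROUTING.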
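The virtual-server table above can also be read by a script to list the real servers behind a VIP. This is a minimal sketch, assuming the listing comes from `ipvsadm -Ln`, has the layout shown above and uses IPv4 addresses. `real_servers` is a hypothetical helper name.

```shell
# Hypothetical helper: read an ipvs listing on stdin and print the real
# servers behind the virtual service given as $1 (ip:port).
real_servers() {
    awk -v vip="$1" '
        # A virtual service line starts a new group.
        $1 == "TCP" || $1 == "UDP" { current = $2; next }
        # Real server lines start with "->"; the header line is skipped
        # because its second column does not start with a digit.
        $1 == "->" && current == vip && $2 ~ /^[0-9]/ { print $2 }
    '
}

# Example with the listing from this post
real_servers 10.105.148.194:10000 <<'EOF'
TCP  10.105.148.194:10000 rr
  -> 10.244.0.73:10000            Masq    1      0          0
  -> 10.244.0.74:10000            Masq    1      0          0
EOF
```

The printed addresses are the pod IPs that would go into `-d` matches for POSTROUTING LOG rules, the same way as in the iptables case.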

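Summary

This article reviewed some classic Linux knowledge: how iptables and ipvs work.

It also discussed in detail how, without any plugin, the tracing facilities that ship with the operating system can show which backend pod actually served a request. None of this is suitable for production, though. It is too low-level, and day-to-day operations should not touch low-level configuration. If you really must use it, wrap it in an automation script or a plugin first.

When nginx-ingress-controller is not used, or when I want to record the real pod behind service-to-service traffic, is there a ready-made plugin or component that does this for us? Of course there is, and that is the topic of the next post. Stay tuned.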

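That's all for this article.

My knowledge is limited, so if I got anything wrong or left anything out, corrections are very welcome...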